If you have read some of my previous posts on machine learning you might have noticed that, up until now, all the systems I have posted about are designed to trade on either the 1H or the 1D timeframe. Most recently our efforts have focused more heavily on 1H than on 1D candles, mainly because the 1H timeframe has generated much better strategies in terms of risk-adjusted returns (on a variety of other symbols as well) than what we have achieved with daily systems so far. This has made me wonder whether there is a lot of additional potential in the lower timeframes and what the problems of exploiting it might be. In today's post we are going to talk about some initial efforts I have made in coding and finding profitable machine learning systems below the 1H timeframe, what problems I have found and what potential fixes might be available.


It is no mystery that I have never been a big fan of moving below the 1H timeframe. Although we have 1M data from 1986 to 2016 available at Asirikuy, it is considerably harder to estimate data reliability on timeframes below the 1H because of problems like broker feed dependency. Going lower implies a bigger variability in percentage terms for each OHLC bar, variability that can heavily distort trading depending on what you use. Since machine learning systems can be particularly sensitive to changes in the data, using them on the lower timeframes has never seemed like the greatest idea. Problems stemming from this kind of data variability are most prominent when using simple linear algorithms, such as neural networks with linear activation functions or simple linear regression.

However, there are also some big potential benefits attainable by going to higher frequency bars. The first is that systems become inherently more responsive, since positions can be adjusted and decisions can be made much more frequently. There is also inherently more information in higher frequency data, since there are simply more points for the same time period, and this information can be relevant for the training and accuracy of the predictions made by machine learning models. All these potential improvements mean that you have a very real possibility of obtaining much better trading strategies if you go to a higher frequency, provided you can avoid the problems related to feed dependency.


The first thing I wanted to test was whether the information hypothesis presented above is really true. Can we indeed obtain better systems simply by going to a lower timeframe? Is there more relevant information? In order to test this I decided to use one of our most successful neural network frameworks on the 1H. This framework uses an input/output structure based on bar returns and trade outcomes, trades only at a specific hour during the day and always retrains using a moving window of past examples before each trading decision is made. I used the 15M timeframe to make the difference with the 1H really noticeable, and I searched a parameter space of 5000 tests to see if I could find any machine learning systems worth trading over the 1986-2016 period.
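To make the "retrain on a moving window before every decision" idea concrete, here is a minimal sketch. It is not the Asirikuy framework: a one-variable least-squares fit stands in for the neural network, the only input is the previous bar return, the trading-hour filter is left out, and the names `fit_linear` and `walk_forward` are hypothetical.

```python
def bar_returns(closes):
    """Simple bar-to-bar returns from a list of closing prices."""
    return [closes[i] / closes[i - 1] - 1.0 for i in range(1, len(closes))]


def fit_linear(xs, ys):
    """Least-squares fit y = a + b*x (a stand-in for the neural network)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        return mean_y, 0.0  # no variation in the inputs: predict the mean
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def walk_forward(returns, window):
    """Retrain on the last `window` examples before every decision.

    At step t only returns[0..t] are known. Each training example pairs
    a return with the return of the following bar, and the model is
    rebuilt from scratch before predicting returns[t + 1].
    """
    results = []
    for t in range(window, len(returns) - 1):
        inputs = returns[t - window:t]            # returns[t-window .. t-1]
        targets = returns[t - window + 1:t + 1]   # returns[t-window+1 .. t]
        a, b = fit_linear(inputs, targets)
        forecast = a + b * returns[t]
        signal = 1 if forecast > 0 else -1 if forecast < 0 else 0
        # Store the realized next return so the signal can be scored later.
        results.append((t + 1, signal, returns[t + 1]))
    return results


# Synthetic, purely illustrative data: returns that alternate in sign.
demo_returns = [0.01, -0.01] * 20
demo_results = walk_forward(demo_returns, window=10)
```

Because the model is rebuilt at every step from data available up to that bar only, the loop never looks ahead. On 15M data the same loop simply runs over four times as many bars per hour.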

The tests took much longer at first. The 15M tests take longer to run because a system can make trading decisions 4 times instead of once compared to the 1H systems (since it can trade at the designated hour at 00, 15, 30 and 45 minutes), but this can potentially be removed by constraining trading to the 00 bar. Implementing a parameter to control which interval is traded, however, increases the complexity of the parameter space, which is exactly as costly as simply running systems that can train/trade through the 4 different 15M intervals at the designated trading hour.
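The difference in the number of decision points can be illustrated with a small sketch. The function `decision_bars` and its `only_first` flag are hypothetical names used for illustration, and the date and trading hour are arbitrary.

```python
from datetime import datetime, timedelta


def decision_bars(bar_times, trading_hour, only_first=False):
    """Return the bar open times at which a system may make a decision.

    bar_times    : iterable of datetime objects (bar open times)
    trading_hour : hour of the day (0-23) at which the system trades
    only_first   : if True, keep only the bar that opens at minute 00
    """
    selected = []
    for t in bar_times:
        if t.hour != trading_hour:
            continue
        if only_first and t.minute != 0:
            continue
        selected.append(t)
    return selected


def make_bars(start, minutes, count):
    """Evenly spaced bar open times starting at `start`."""
    return [start + timedelta(minutes=minutes * i) for i in range(count)]


start = datetime(2016, 1, 4)           # arbitrary day, for illustration
bars_1h = make_bars(start, 60, 24)     # one day of 1H bars
bars_15m = make_bars(start, 15, 96)    # one day of 15M bars

n_1h = len(decision_bars(bars_1h, trading_hour=8))
n_15m = len(decision_bars(bars_15m, trading_hour=8))
n_15m_00 = len(decision_bars(bars_15m, trading_hour=8, only_first=True))
print(n_1h, n_15m, n_15m_00)  # prints: 1 4 1
```

With retraining before every decision, four decision bars per day mean roughly four times the training work, which is where the extra run time comes from.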

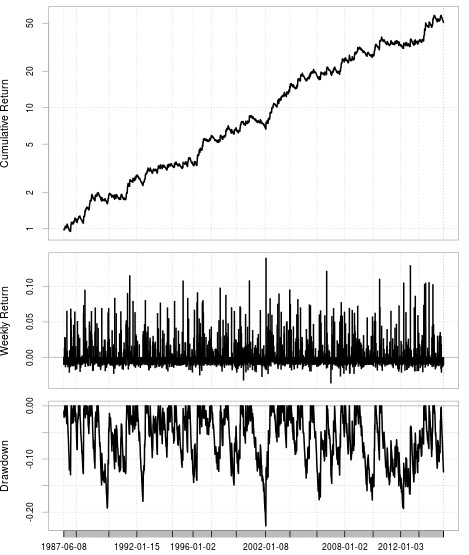

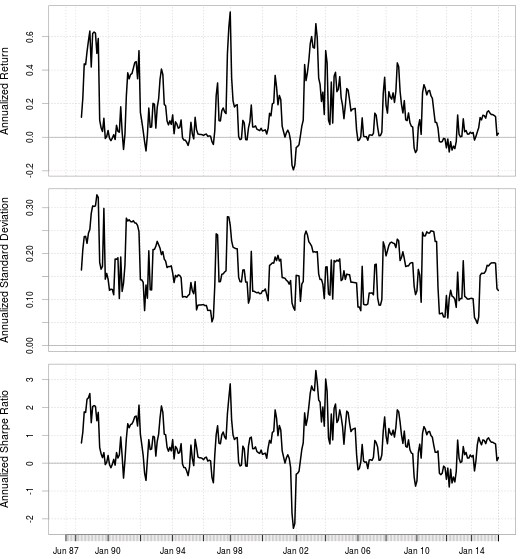

Trading results were indeed significantly better than those on the 1H (statistics for a sample system are shown in the two graphs in this post). There was a significant increase in the linearity of the trading systems created, with the best systems going from an R² of around 0.98 across the searched space to values higher than 0.995. The finer tuning of the function-based trailing stops (these adjust stops on every bar, and the 15M simply has more bars) also led to better control over trade management, which had the nice consequence of improving statistics like the maximum drawdown period length on the 15M. Rolling performance metrics also improved significantly, with the 15M systems only barely touching negative values in their rolling 12-month Sharpe ratios and returns. There really does seem to be more useful information available on the 15M timeframe than on the 1H.
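For readers who want to reproduce these two statistics on their own results, here is a minimal sketch. It assumes that linearity means the R² of a straight-line fit of the balance against the bar or trade index (the post does not say whether balance or log-balance is used), and that the rolling Sharpe ratio is computed from monthly returns with a zero risk-free rate and annualized by √12. The function names are hypothetical.

```python
import math
import statistics


def equity_r_squared(equity):
    """R² of a least-squares straight line fitted to an equity curve."""
    n = len(equity)
    if n < 3:
        raise ValueError("need at least 3 points")
    mean_x = (n - 1) / 2
    mean_y = sum(equity) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    syy = sum((y - mean_y) ** 2 for y in equity)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(equity))
    if syy == 0:
        return 0.0  # flat curve: nothing to explain
    # For a one-variable regression, R² equals the squared correlation.
    return (sxy * sxy) / (sxx * syy)


def rolling_sharpe(monthly_returns, window=12):
    """Annualized Sharpe ratio over each trailing `window` months.

    Assumes a zero risk-free rate; windows with zero volatility give NaN.
    """
    values = []
    for end in range(window, len(monthly_returns) + 1):
        chunk = monthly_returns[end - window:end]
        sd = statistics.stdev(chunk)
        if sd == 0:
            values.append(float("nan"))
        else:
            values.append(statistics.mean(chunk) / sd * math.sqrt(12))
    return values


# Illustrative inputs only, not results from the post.
straight_line = [100 + 2 * i for i in range(50)]
wobbly_line = [100 + 2 * i + (5 if i % 2 else -5) for i in range(50)]
demo_monthly = [0.01, 0.02] * 12
```

A perfectly straight equity curve gives an R² of 1, and any wobble around the trend pulls it below 1, so a move from 0.98 to 0.995 means much smaller deviations from the trend line.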


Moving to lower timeframes has the additional interesting effect of potentially reducing data mining bias a lot, simply due to the increase in the amount of data available (finding systems purely through mining strength is harder on more data). It may also lead to the finding of profitable machine learning systems where this has not been possible up until now on the 1H (see my last post on this matter). Perhaps the key to profitable systems on the AUD/USD and the USD/CAD does not lie in some obscure relationship within the data but simply in having information that is not present in the 1H because it only shows up on a higher frequency timeframe. I am now running some experiments to see whether this is actually the case.

Of course, there are many problems to deal with. Moving lower, as I mentioned before, implies higher data sensitivity, and this is something I have also seen in my tests. The same feed variability in pips per bar that exists on the 1H has a much greater effect on the 15M, because 1 pip usually represents a far larger percentage of a 15M bar than of a 1H bar, and this causes significant problems. However, this can be somewhat alleviated by training for more epochs and by using non-linear algorithms, such as neural networks with non-linear activation and output functions. Feed dependency is simply too high on the 15M data to consider linear algorithms even remotely useful.
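A quick back-of-the-envelope calculation shows why the same feed difference weighs more on smaller bars. The numbers are assumptions for illustration only: a hypothetical average 1H range of 20 pips, and a bar range that scales with the square root of the bar duration, as it would in a random walk.

```python
import math


def pip_share(feed_diff_pips, bar_range_pips):
    """Fraction of a bar's range taken up by a feed difference."""
    return feed_diff_pips / bar_range_pips


RANGE_1H_PIPS = 20.0  # hypothetical average 1H range, not a measured value

# Assumption: range scales with sqrt(duration), as in a random walk.
range_15m_pips = RANGE_1H_PIPS * math.sqrt(15 / 60)

share_1h = pip_share(1.0, RANGE_1H_PIPS)
share_15m = pip_share(1.0, range_15m_pips)

print(f"1H : 1 pip is {share_1h:.1%} of the bar range")
print(f"15M: 1 pip is {share_15m:.1%} of the bar range")
```

Under these assumptions a 1 pip difference between two broker feeds takes up twice as large a share of a 15M bar as of a 1H bar, so inputs built from bar returns are distorted correspondingly more.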

Bear in mind that I have only done a few days of testing on this matter and there are still many things to research, so expect more posts about lower timeframe machine learning systems in the future. If you would like to learn more about machine learning and how you too can build your own machine learning strategies that constantly retrain, please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach towards automated trading in general.

why don’t you test a multiple-timeframe strategy?

higher timeframe as set up (long or short) – lower timeframe for trade trigger.

this approach greatly improves entry accuracy even for manually designed systems.

you could take one of your successful H1 strategies and see if you can improve it further with entry signals on M1 or M5.

it also saves tons of computational time as you evaluate only M1/M5 bars around your (couple hundred?) signals generated on H1 instead of entire history.

by doing this, you can benefit from lower timeframe data while eliminating some of its disadvantages.