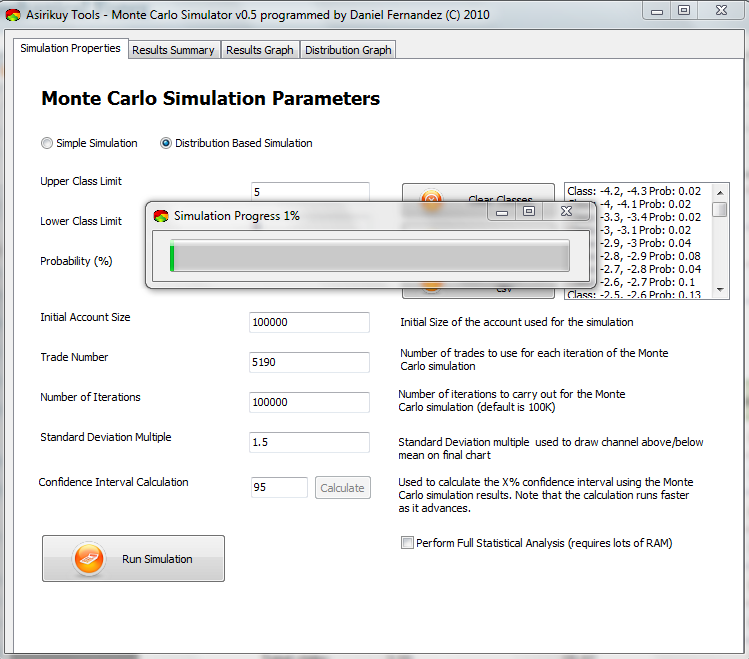

When we started running simulations in Asirikuy, one of the first problems we came across was the limit on iteration count and trade count imposed by the program's very large RAM requirements. It became clear after our first tests that, due to the 4GB maximum memory available to 32-bit applications, our simulations would be limited to about 5000 trades at 100K iterations. However, since this limitation needed to be lifted for adequate Monte Carlo simulations of portfolios and large system setups, I worked hard on it and came up with a way to make the simulator's memory requirements very low. In today's post I will share with you what I learned from this journey and how I solved the problem of very large memory usage within our Delphi-based Asirikuy Monte Carlo software.

The problem with the Monte Carlo simulator was very simple: you need to save the outcomes of all simulations so that you can later access them and compare them to each other to create statistics. This means storing a very large matrix or file containing the trading results of all iterations, which comes to about 40 million entries for a 100K-iteration, 400-trade simulation (100,000 × 400). For a 5000-trade simulation this requirement becomes extremely high at 500 million entries. Long story short, you need an extremely large amount of memory and you need to be able to search and compare things within your storage REALLY fast.
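To put those entry counts in memory terms, here is a small back-of-the-envelope calculation. It assumes each stored trade outcome is an 8-byte double (Delphi's `Double`); the post does not say which type the simulator actually uses, so treat the byte figures as an estimate under that assumption.

```python
# Rough size of the full results matrix (iterations x trades).
# Assumption: each trade outcome is stored as an 8-byte double.
BYTES_PER_ENTRY = 8


def results_matrix_size(iterations: int, trades: int) -> tuple[int, float]:
    """Return (number of entries, approximate size in GiB)."""
    entries = iterations * trades
    gib = entries * BYTES_PER_ENTRY / 2**30
    return entries, gib


for trades in (400, 5000):
    entries, gib = results_matrix_size(100_000, trades)
    print(f"{trades:>5} trades: {entries:,} entries, ~{gib:.2f} GiB")
```

Under this assumption, 400 trades need about 0.30 GiB while 5000 trades need about 3.73 GiB, which is already at the edge of the 4GB address space of a 32-bit process before counting anything else the program holds in memory.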

–

The first thing that came to mind to solve the above problem was some sort of file storage mechanism using a table implementation. An Asirikuy member suggested the "big table" implementation by Synopse, but the results were simply not satisfactory: I quickly found out that the "amazing speed" of this table implementation was merely a consequence of in-memory storage, which completely defeats the purpose of using a table to avoid large memory requirements. The second thing that came to mind was to implement a true database (MySQL or Absolute DB), which had disastrous results from a speed point of view. To give you an idea, the true database implementation carried a 100x speed penalty for simple storage and a 1000x speed penalty when accessing the database to run statistics. Simply going through 40 million items to find those that belong to iteration X was a painful process, even with the help of indexes. Now imagine the calculation of confidence intervals, where every trade has to be compared against every other trade and the 40 million × 40 million loop becomes a nightmare.
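The scale of that all-against-all loop is easy to quantify. The sketch below counts the distinct pairs among the 40 million stored results (a full n × n loop does roughly twice this work) and converts the count to days at a hypothetical rate of one billion comparisons per second, a figure chosen only for perspective and not measured on the Asirikuy simulator.

```python
def pairwise_comparisons(n: int) -> int:
    """Distinct unordered pairs among n items: n * (n - 1) / 2."""
    return n * (n - 1) // 2


entries = 100_000 * 400  # 40 million stored trade results
pairs = pairwise_comparisons(entries)

# Hypothetical throughput, only to put the number in perspective.
COMPARISONS_PER_SECOND = 1_000_000_000
days = pairs / COMPARISONS_PER_SECOND / 86_400

print(f"{entries:,} entries -> {pairs:,} comparisons (~{days:.1f} days at 1e9/s)")
```

This prints `40,000,000 entries -> 799,999,980,000,000 comparisons (~9.3 days at 1e9/s)`, before any database access overhead is added on top.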

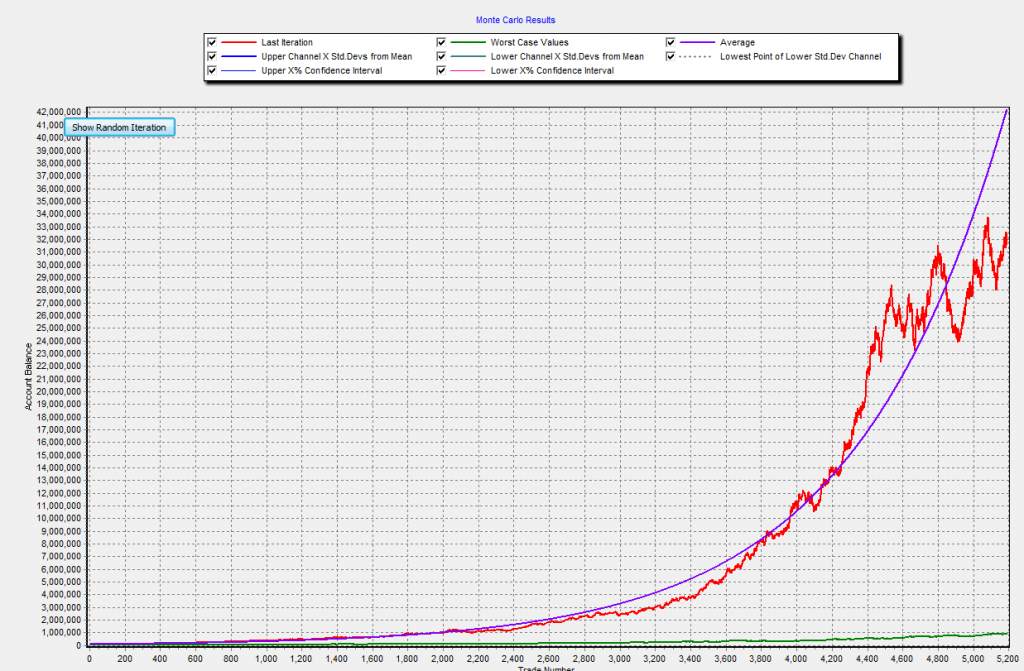

Finally a light bulb went on above my head and I realized that the current way of doing things was a little bit "dumb", since most of the information we were calculating doesn't actually hold that much relevance. In reality we are mostly interested in a couple of statistics that allow us to determine worst-case scenarios, particularly the drawdown-related values and the worst consecutive losing count. These values, as well as the average of all trades, can be calculated iteratively as the simulation proceeds (there is no need to save per-trade values because the calculations are done while we move through each iteration), and therefore we can obtain the results we are interested in with very low memory requirements (just one stored entry per iteration).
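A minimal sketch of this single-pass idea follows. It assumes each iteration resamples trades with replacement from a list of historical trade results and measures drawdown in account currency from a starting equity of zero; the function names are hypothetical and this is not the Asirikuy Delphi code.

```python
import random


def simulate_iteration(trade_pool, n_trades, rng):
    """Run one Monte Carlo iteration and return its worst-case statistics.

    Trades are resampled with replacement from trade_pool (an assumed
    resampling scheme). No per-trade values are kept: running values are
    updated as each trade is drawn.
    """
    if n_trades <= 0:
        raise ValueError("n_trades must be positive")
    equity = peak = max_drawdown = total = 0.0
    losing_streak = max_losing_streak = 0
    for _ in range(n_trades):
        profit = rng.choice(trade_pool)
        total += profit
        equity += profit
        peak = max(peak, equity)                      # highest equity so far
        max_drawdown = max(max_drawdown, peak - equity)
        if profit < 0:
            losing_streak += 1
            max_losing_streak = max(max_losing_streak, losing_streak)
        else:
            losing_streak = 0
    return max_drawdown, max_losing_streak, total / n_trades


def run_simple_simulation(trade_pool, n_trades, iterations, seed=0):
    """Keep one result tuple per iteration, never the full trade matrix."""
    rng = random.Random(seed)
    return [simulate_iteration(trade_pool, n_trades, rng)
            for _ in range(iterations)]
```

For example, `results = run_simple_simulation([120.0, -80.0, 45.0, -60.0], 400, 100_000)` followed by `max(r[0] for r in results)` gives the worst drawdown across all iterations, while memory grows only with the number of iterations, not with iterations × trades.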

What I then did was restructure the program to allow for "simple" simulations, which only calculate the statistics that can be obtained without "memory intensive" processing, and to let the user choose to perform "all statistics" if the system being evaluated fits within the memory limits. The simple simulations include all drawdown-related statistics as well as the maximum consecutive losing and winning trades, but do not include standard deviation calculations, confidence interval calculations or probability-related calculations (for example, the probability of reaching X% profit/loss across all iterations). Overall, this new methodology allows users to obtain the basic worst-case statistics necessary for trading for almost any trade count and iteration number, taking our Monte Carlo simulator to a new level of usefulness.
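The choice between "simple" and "all statistics" could be backed by a quick check of whether the full results matrix fits in memory. The sketch below assumes 8-byte entries and a hypothetical 3 GiB budget for a 32-bit process; both numbers are illustrative assumptions, not values taken from the simulator.

```python
# Hypothetical budget: what a 32-bit process might realistically spare.
MEMORY_BUDGET_BYTES = 3 * 2**30
BYTES_PER_ENTRY = 8  # assumption: one 8-byte double per stored trade result


def choose_mode(iterations: int, trades: int,
                budget: int = MEMORY_BUDGET_BYTES) -> str:
    """Return 'all' if the full iterations x trades matrix fits, else 'simple'."""
    needed = iterations * trades * BYTES_PER_ENTRY
    return "all" if needed <= budget else "simple"


print(choose_mode(100_000, 400))   # 320,000,000 bytes fit in the budget
print(choose_mode(100_000, 5000))  # 4,000,000,000 bytes do not
```

With these assumptions the first call returns `all` and the second returns `simple`, so a large trade count would fall back to the memory-light statistics.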

–

This new implementation of our simulator will be released to all Asirikuy members this weekend. If you would like to know more about Monte Carlo simulators and how they can help you determine when a system is no longer "worth trading", please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach to automated trading in general. I hope you enjoyed this article! :o)

Hi,

I do not know your overall level of algorithmic knowledge, so I might be saying something very obvious to you.

You mentioned looping algorithms, but you clearly know that only a few MC simulation results are worth keeping and useful to compare. Moreover, you also know by which criteria to recognize these important result sets. So why not make your algorithm n*log(n) in complexity instead of n*n? You could, for example, rebuild the heap (as in heap sort) of your result sets after each simulation according to some profitability criterion, and then compare only the few result sets at the top of the heap (now sorted) with the result set you just obtained. Or add the new result set and rebuild the heap afterwards: if the recently calculated result set is not worth keeping, it will sink down into the heap.
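The heap suggestion can be sketched with Python's `heapq`. This version uses a bounded min-heap of size k rather than re-heapifying all result sets after each simulation, and it assumes each simulation can be reduced to a single hypothetical "profitability score"; whether such a reduction is valid for the simulator's statistics is exactly the point Daniel questions in his reply.

```python
import heapq


def keep_top_k(result_scores, k):
    """Keep the k best result sets seen so far using a size-k min-heap.

    Each push or replace costs O(log k), so n results take O(n log k)
    instead of comparing every new result against all previous ones.
    Returns (score, index) pairs, best first.
    """
    if k <= 0:
        return []
    heap = []  # min-heap of (score, index); heap[0] is the weakest kept result
    for index, score in enumerate(result_scores):
        if len(heap) < k:
            heapq.heappush(heap, (score, index))
        elif score > heap[0][0]:
            heapq.heapreplace(heap, (score, index))  # evict the weakest
    return sorted(heap, reverse=True)
```

For example, `keep_top_k([5, 1, 9, 3, 7], 2)` keeps only the two best scores, returning `[(9, 2), (7, 4)]`.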

Also, Matlab could help since it uses a vector-based language. This might make comparing results much easier and faster to program, with one line of code per mathematical operation instead of writing iteration loops over arrays or matrices yourself. About calculation speed and running the engine I cannot tell, but I know that Matlab can compile your code into a binary that runs without Matlab, or even translate your code into C. So speed might not be an issue compared to the productivity gained?

Hi Alex,

Thank you for your comment :o) As far as I can see, some calculations within the Monte Carlo simulator need full n*n complexity because they cannot be carried out without a full iterative comparison between all trades. Saving results across more limited loops is not viable, since you need to actively compare each trade with every other trade within the run. Sure, these statistical calculations could be carried out by simply rerunning one simulation for each comparison (which saves memory but increases computation time). There are obviously some ways in which the current simulator can be improved, which I will implement in the near future :o), something I would rather do in Delphi. Regarding MatLab, I think I have already explained in replies to a few of your other comments why I do not intend to use it within Asirikuy. Thank you very much again for your comment,

Best Regards,

Daniel
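The "rerun instead of store" trade-off mentioned in the reply can be sketched by making every iteration reproducible from its own seed. The seeding scheme, the function names and the pluggable `compare` callback below are hypothetical illustrations, not how the Asirikuy simulator works.

```python
import random


def iteration_trades(trade_pool, n_trades, base_seed, iteration):
    """Rebuild the trade sequence of one iteration from its seed.

    Hypothetical scheme: iteration i always uses the same integer seed,
    so its trades can be regenerated on demand instead of being stored.
    """
    rng = random.Random(base_seed * 1_000_003 + iteration)
    return [rng.choice(trade_pool) for _ in range(n_trades)]


def count_pairs(trade_pool, n_trades, iterations, compare, base_seed=0):
    """Count ordered pairs (i, j), i != j, where compare(trades_i, trades_j) is true.

    At most two iterations' trades are in memory at once; the price is
    regenerating iteration j once for every i (about iterations**2 rebuilds).
    """
    hits = 0
    for i in range(iterations):
        trades_i = iteration_trades(trade_pool, n_trades, base_seed, i)
        for j in range(iterations):
            if j == i:
                continue
            trades_j = iteration_trades(trade_pool, n_trades, base_seed, j)
            if compare(trades_i, trades_j):
                hits += 1
    return hits
```

For instance, `count_pairs([120.0, -80.0, 45.0], 400, 50, lambda a, b: sum(a) > sum(b))` counts how often one iteration's net result beats another's. Memory stays flat, but the running time grows with the square of the iteration count multiplied by the trade count, which is the computation-time penalty the reply describes.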

Ah, I did not receive any notifications about the other comments, so I guess I'll have to search manually for the articles where I left them. Anyway, I'll have the chance to take a more in-depth look at your whole trading framework and understand its ins and outs since, as you probably saw, I subscribed to Asirikuy this weekend.

By the way, joking aside, I had no idea Delphi was still alive and maintained!