Through the “neural networks in trading” series of posts I have discussed several aspects of neural networks in trading. I have covered how their use can lead to the creation of profitable strategies, and how they can potentially improve any strategy through an “intelligent intervention” in money management. Today I want to talk about one of the few things all my neural network developments have in common, and why I consider this piece so essential for the development of reliable NN-based techniques. This fundamental concept, the moving window, is a central but not obvious piece of the neural network puzzle. It has to be taken into account in any NN construction to ensure that the end result is a viable, long-term positive contribution to your trading arsenal.

When we develop any neural network solution we must use some data to train it, so that it can “learn” and produce the output we desire. For example, if we are attempting to build a network which predicts the weekly close of a currency pair, we need to feed it data correlated with this desired output, such as the previous weekly closing prices of other pairs or instruments. However, this raises the question of how much data to provide, since the amount of available data naturally increases as the markets move forward. Should a neural network be trained on all available data, or on a limited subset of it?
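To make the question concrete, here is a minimal sketch of how such a training set might be assembled. It makes a simplifying assumption: it uses the previous closes of the same series as inputs instead of the closes of other instruments. The names `make_samples` and `n_inputs` are hypothetical, and the prices are made up.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], float]  # (network inputs, desired output)


def make_samples(closes: Sequence[float], n_inputs: int) -> List[Sample]:
    """Turn a series of weekly closes into (inputs, target) training pairs.

    Sample t uses the closes of weeks t-n_inputs .. t-1 as inputs and the
    close of week t as the output the network should learn to produce.
    """
    if n_inputs < 1:
        raise ValueError("n_inputs must be at least 1")
    samples: List[Sample] = []
    for t in range(n_inputs, len(closes)):
        inputs = [float(c) for c in closes[t - n_inputs:t]]
        samples.append((inputs, float(closes[t])))
    return samples


if __name__ == "__main__":
    weekly_closes = [1.10, 1.12, 1.11, 1.15, 1.14, 1.16]  # made-up prices
    for inputs, target in make_samples(weekly_closes, n_inputs=3):
        print(inputs, "->", target)
    # Every new weekly close adds one more sample to the pool.
    print(len(make_samples(weekly_closes, 3)), "samples")
```

Each new week adds one sample, which is why the pool of possible training data keeps growing over time.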

There are several problems with the “all in” approach, which simply uses all available data to train the neural network. The first and most obvious problem is that backtesting results obtained with such a technique will not be a valid proxy for future results, because future calculations will be fundamentally different. For example, suppose you obtained profitable results from weeks 100 to 500 using your neural network. The training done from weeks 500 to 1000 will then be completely different, since the training set will grow tremendously. As the training set grows, the fitting problem becomes more complex, and you may find that the procedure you used before is no longer useful at all. The network may also end up fitting an older range of data, which is now largely irrelevant, better than the recent one, leading to poor overall results.

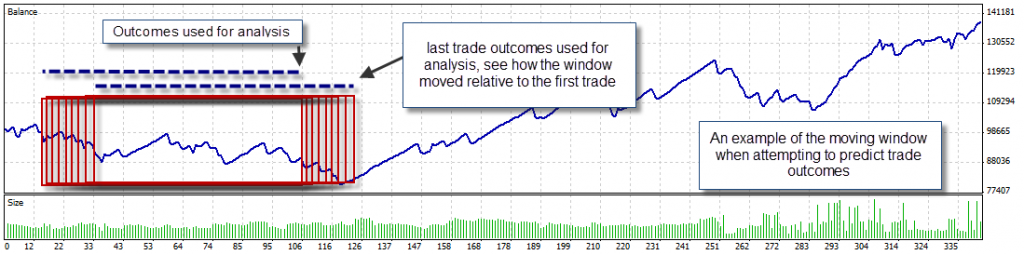

What is the best solution then? I have found that the most useful technique when building NN-based strategies and strategy modifications is a “moving window”. With this technique the training data does not keep increasing; instead it is a “block” of constant size. In the example above, a moving window would be made up of the last 100 weeks, regardless of how much weekly data is available overall. This means that the system is always trained on the last 100 available data points and therefore maintains a “fit” to the most recent and relevant conditions.
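The difference between the two approaches comes down to where the training slice starts. Here is a minimal sketch, assuming the training examples are stored in chronological order. `training_ranges` is a hypothetical helper, not part of any library. With `expanding=True` it reproduces the “all in” approach; otherwise it produces a moving window of fixed size.

```python
from typing import Iterator, Tuple


def training_ranges(n_samples: int, window: int,
                    expanding: bool = False) -> Iterator[Tuple[int, int, int]]:
    """Yield (start, end, test_index) for walk-forward training.

    The model is trained on samples[start:end] and then evaluated on
    samples[test_index]. With expanding=True ("all in") start stays at 0 and
    the training set keeps growing; otherwise it is a fixed-size moving window.
    """
    if window < 1 or window > n_samples:
        raise ValueError("window must be between 1 and n_samples")
    for end in range(window, n_samples):
        start = 0 if expanding else end - window
        yield start, end, end


if __name__ == "__main__":
    n_weeks = 1000
    for expanding in (True, False):
        sizes = [end - start for start, end, _ in training_ranges(n_weeks, 100, expanding)]
        label = "expanding" if expanding else "moving"
        print(label, "first:", sizes[0], "last:", sizes[-1])
```

Over a 1000-week history, the “all in” training set grows from 100 to 999 samples, while the moving window always trains on exactly 100.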

The advantages of the moving window technique are quite significant. Since the size of the training set is always constant, your 10-year testing results are relevant: they show that the “standard technique” of retraining on the same amount of data has predictive capacity. Training is always done with the same number of examples, and convergence to a solution behaves similarly throughout the whole testing period. Test results are therefore a more valid proxy for future results, because the updating/training process used in the future will match the one used in testing in most parameters. Since the training set does not grow, there is no potential for overfitting to old, irrelevant values. Training convergence also remains unproblematic going forward, as the fitting problem stays largely the same.
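One practical way to guarantee this equivalence is to route the backtest through the very function that would run live. That way every retraining sees the latest `window` examples and nothing else. This is a sketch under stated assumptions: `train` and `predict` stand in for your network's training and forward pass. The mean-of-targets “model” in the usage example is a hypothetical placeholder, not a neural network.

```python
from statistics import fmean
from typing import Any, Callable, List, Sequence, Tuple

Sample = Tuple[Sequence[float], float]  # (inputs, target), oldest first
Train = Callable[[Sequence[Sample]], Any]
Predict = Callable[[Any, Sequence[float]], float]


def predict_next(history: Sequence[Sample], new_inputs: Sequence[float],
                 window: int, train: Train, predict: Predict) -> float:
    """Train on the most recent `window` samples only, then predict one unseen case."""
    if window < 1 or len(history) < window:
        raise ValueError("history must contain at least `window` samples")
    model = train(history[len(history) - window:])
    return predict(model, new_inputs)


def backtest(samples: Sequence[Sample], window: int,
             train: Train, predict: Predict) -> List[Tuple[float, float]]:
    """Replay history with the same routine used live; returns (prediction, actual) pairs."""
    results = []
    for t in range(window, len(samples)):
        inputs, actual = samples[t]
        results.append((predict_next(samples[:t], inputs, window, train, predict), actual))
    return results


# Hypothetical placeholder "model": the mean target of the training window.
def train_mean(train_set: Sequence[Sample]) -> float:
    return fmean(target for _, target in train_set)


def predict_mean(model: float, inputs: Sequence[float]) -> float:
    return model


if __name__ == "__main__":
    data = [([0.0], float(i)) for i in range(6)]
    print(backtest(data, 2, train_mean, predict_mean))
    print(predict_next(data, [0.0], 2, train_mean, predict_mean))
```

Because `backtest` only ever calls `predict_next`, the procedure validated in testing is, by construction, the one that will be used going forward.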

A fixed moving window also gives you additional confidence in the parameters of your network. For example, suppose the neural network finds a very good fit with one hidden layer of 50 neurons trained for 500 epochs on every training window within a 10-year testing period. You can then be confident that this exact same network topology and training procedure will keep working in the future. When you use all the data instead of a moving window, the data complexity keeps increasing. You may find that the topology no longer works, or that the training procedure no longer converges to a solution of adequate quality. The moving window approach is therefore your best choice when attempting to build a reliable neural network based technique.

It is also worth mentioning that each known output in trading comes with a large number of inputs. So even if you train the network only on the past X outcomes, you are in fact using data as far back as X+Y, where Y is the number of inputs associated with each output. For example, suppose I train a network on the outcomes of the past 50 trades, with each trade using the 50 previous trade results as inputs. I am then in reality using information as far back as 100 trades in the past: to introduce the outcome of trade 50, I also need to give the network its input values (trades 51 to 100).
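The count can be checked mechanically. The sketch assumes that trade 1 is the most recent closed trade. It also assumes that the outcome of trade k is presented with the results of trades k+1 to k+Y as its inputs. `lookback` and `trades_used` are hypothetical helpers written for illustration.

```python
from typing import Set


def trades_used(n_outputs: int, n_inputs: int) -> Set[int]:
    """Every past trade touched by the training set (trade 1 = most recent)."""
    used: Set[int] = set()
    for k in range(1, n_outputs + 1):                # outputs: trades 1..X
        used.add(k)
        used.update(range(k + 1, k + n_inputs + 1))  # inputs of trade k: k+1..k+Y
    return used


def lookback(n_outputs: int, n_inputs: int) -> int:
    """Oldest trade reached: the oldest output X brings in inputs up to X + Y."""
    if n_outputs < 1 or n_inputs < 0:
        raise ValueError("need at least one output and a non-negative input count")
    return n_outputs + n_inputs


if __name__ == "__main__":
    print(lookback(50, 50), max(trades_used(50, 50)))
```

With X = Y = 50, both functions agree on 100 trades, which is twice the nominal 50-trade training set.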

The size of this moving window is also an interesting parameter. Choosing it might simply be a matter of trial and error across the wide variety of circumstances to which your network will be exposed. In the example above, suppose my network were going to be used on 10 instruments. I would test different moving window sizes on half of the available data and then choose the one which performs best on average across all pairs. After that, I would run an out-of-sample test on the other half of the data to see if this is the right approach. Bear in mind, however, that I never change the moving window size after the out-of-sample test, as this test is done only for verification (changing the window because of an out-of-sample test would make it in-sample). If the moving window doesn’t work out-of-sample, I discard the whole network, as it simply doesn’t have the necessary level of robustness.
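The selection protocol can be written down so that the out-of-sample half is touched exactly once. Here is a minimal sketch. `evaluate(instrument, window, half)` is a hypothetical callable standing in for your own backtest of the network on one half of the data, and `min_score` is an assumed acceptance threshold.

```python
from statistics import fmean
from typing import Callable, Optional, Sequence

# Hypothetical: evaluate(instrument, window, half) -> score, higher is better,
# where half is "in_sample" or "out_of_sample".
Evaluate = Callable[[str, int, str], float]


def choose_window(instruments: Sequence[str], candidates: Sequence[int],
                  evaluate: Evaluate) -> int:
    """Pick the window size with the best average in-sample score across instruments."""
    averages = {w: fmean(evaluate(inst, w, "in_sample") for inst in instruments)
                for w in candidates}
    return max(averages, key=averages.__getitem__)


def passes_out_of_sample(instruments: Sequence[str], window: int,
                         evaluate: Evaluate, min_score: float = 0.0) -> bool:
    """Single verification run; the window is never re-tuned based on this result."""
    return fmean(evaluate(inst, window, "out_of_sample") for inst in instruments) > min_score


def select_or_discard(instruments: Sequence[str], candidates: Sequence[int],
                      evaluate: Evaluate, min_score: float = 0.0) -> Optional[int]:
    """Return the chosen window, or None if the whole network should be discarded."""
    window = choose_window(instruments, candidates, evaluate)
    if passes_out_of_sample(instruments, window, evaluate, min_score):
        return window
    return None
```

Returning `None` rather than trying another candidate mirrors the rule above: a failed out-of-sample check ends the experiment instead of feeding back into the choice of window.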

In the end you’ll see that a neural network technique based on a moving window provides the best out-of-sample results and is bound to be a very good proxy for the future. Using a moving window gives you more certainty about the quality and overall results of your network topology. It also ensures that your results are always updated to the latest behavior of the input variables. If you would like to learn more about neural networks in trading and how to evaluate and test your own automated trading systems, please consider joining Asirikuy.com. It is a website filled with educational videos, trading systems and development, and it offers a sound, honest and transparent approach towards automated trading in general. I hope you enjoyed this article! :o)

Hey Daniel, great idea! I also think it would work to train the NN on a specific pattern that you want to trade (wedge, flag, etc.) and never train it again. If it works successfully in backtesting, then the results are also consistent.

Hi Franco,

Thank you for your comment :o) Pattern finding is an interesting area of research, but it is quite difficult to train a NN for it, as you need to do a lot of work on how you parametrize patterns. The number of inputs needs to be constant, while pattern lengths are usually very variable. You therefore need to define criteria that describe patterns spanning X candles without that number having to appear explicitly anywhere (quite tricky). For example, you would first need to define the number of candles within an X% ATR range, and then feed the network the high, low, range, average candle size, deviation, etc. You also need to “handpick” all the patterns yourself to build and train the network, which is bound to be extremely time-consuming. For these reasons I will not be exploring this option, but sure, feel free to make suggestions or explore this area yourself :o) I might explore simple candlestick pattern filtering based on previous price action and trade outcomes, but we’ll see if I get there! Thanks again for posting,

Best Regards,

Daniel
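The fixed-length input problem Daniel describes can be illustrated with a small sketch. It encodes a pattern of variable length as the same six numbers every time. This is only one possible reading of “the number of candles within an X% ATR range”: walk back from the latest candle while the combined high-low range stays within a fraction of the ATR. `Candle`, `pattern_features` and `atr_fraction` are hypothetical names, and the ATR is assumed to be precomputed. The sketch does not address the hand-labelling of patterns.

```python
from statistics import fmean, pstdev
from typing import List, NamedTuple, Sequence


class Candle(NamedTuple):
    high: float
    low: float


def pattern_features(candles: Sequence[Candle], atr: float,
                     atr_fraction: float) -> List[float]:
    """Fixed-length features for a pattern whose length in candles varies.

    Starting from the latest candle, the pattern is extended backwards while the
    combined high-low range stays within atr_fraction * atr (the latest candle is
    always counted). Returns [count, high, low, range, mean size, size stdev].
    """
    if not candles or atr <= 0 or atr_fraction <= 0:
        raise ValueError("need candles and a positive ATR and fraction")
    limit = atr_fraction * atr
    hi, lo = candles[-1].high, candles[-1].low
    count = 1
    for c in reversed(candles[:-1]):
        new_hi, new_lo = max(hi, c.high), min(lo, c.low)
        if new_hi - new_lo > limit:
            break
        hi, lo, count = new_hi, new_lo, count + 1
    sizes = [c.high - c.low for c in candles[-count:]]
    return [float(count), hi, lo, hi - lo, fmean(sizes), pstdev(sizes)]
```

Whether the pattern spans 3 candles or 30, the network receives the same number of inputs. The candle count becomes a feature instead of defining the input size.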

Hey Daniel, thanks for the reply!

I hear what you say, sounds difficult to make ANNs work. I just started to learn how to program this today in C# using the .NET wrapper, I’m going to play around with it a bit trying to fit complex functions etc then I’ll move forward to creating strategies. It’s going to take me a while but I’ll be joining your insane quest soon :)