It is no mystery to anyone involved in Neural Network trading that designing systems based on this learning technique is neither trivial nor intuitive. Over the past year I have written many articles on this subject, addressing several aspects of the issue as I made the journey from complete ignorance to building practical trading techniques. Up until now my only successful system-building venture had been the creation of the Sunqu trading technique, which is able to generate positive trading results in simulations based entirely on daily bars, learning certain particularities about closing prices and comparing this to results on a parallel neural network. However, I was intrigued by the idea of building less complex and more robust neural network systems, so I set out to build a trading tactic which would use more highly organized inputs and achieve results from more intuitively derived associations. In this post I will talk about the system I arrived at after a lot of analysis and testing in this direction, building beyond Sunqu and greatly expanding our possibilities with Neural Network based strategies. You will now learn a little bit about my latest friend: Paqarin :o).

Before going into what Paqarin does and why I believe it is a great step forward from Sunqu (and a great complement to its trading), I would like to explore some of the shortcomings of this first success in Neural Network strategy building. I would also like to say that this new strategy doesn’t make Sunqu obsolete because, despite the new system having some improvements, Sunqu continues to be a good strategy in its own right and a completely different approach when compared with our new system. It is however clear that Sunqu had some characteristics which I wished to improve upon. The first was the overall complexity of the strategy, which was quite high and difficult to understand, since the training of the network and the actual results come from quite obscure associations (even when considering the inherent black-box nature of the internal associations). The second was that training the networks took a large amount of time and reproducibility was not perfect, due to the nature of the actual associations made by the neural network. Moving on to the next Neural Network project, I wanted to create a strategy which had clearer associations, traded more reproducibly and could be back-tested in a fraction of the time it took to back-test Sunqu.


But how could I do this? The first thing I did was to search for ways to normalize data in order to create an input which I could then manipulate to create clearer associations inside the network. It is clear that you cannot normalize the values of candles as a function of past values (such as the last day’s range) because the values for the next candle may fall outside the boundaries you have set. The solution to this problem was quite simple (and I will hint that it involved looking at the “big picture”) but I will keep the specifics of how it was solved for a video inside the Asirikuy community. Once the problem of data normalization was solved I could get proper inputs which I could easily compare and use to train my neural networks. Training went perfectly well and I could get fast and almost perfect matches with past data but…
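To make the normalization problem concrete, here is a minimal Python sketch. It only illustrates why scaling by the previous day's range is unbounded; it is not the normalization Paqarin uses, which is not disclosed here. The function name and the bar values are hypothetical.

```python
def normalize_by_previous_range(prev_high, prev_low, price):
    """Scale a price so that 0.0 is yesterday's low and 1.0 is yesterday's high."""
    prev_range = prev_high - prev_low
    if prev_range <= 0:
        raise ValueError("previous range must be positive")
    return (price - prev_low) / prev_range


# Hypothetical daily bars as (open, high, low, close); not real market data.
previous_bar = (1.3000, 1.3100, 1.2950, 1.3050)
current_bar = (1.3050, 1.3300, 1.3040, 1.3280)  # closes above yesterday's high

# Yesterday's close scaled by yesterday's own range stays inside [0, 1] ...
scaled_prev_close = normalize_by_previous_range(
    previous_bar[1], previous_bar[2], previous_bar[3]
)

# ... but today's close scaled by yesterday's range lands well above 1.0.
scaled_close = normalize_by_previous_range(
    previous_bar[1], previous_bar[2], current_bar[3]
)

print(scaled_prev_close, scaled_close)
```

Any input scaled this way can leave the interval the network was trained on whenever the market breaks out of the prior range, which is exactly the boundary problem described above.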

All trading tests failed with spectacular losses or extremely small edges. As has been my experience in the past with neural networks, whenever I get good matches with past data any future predictions fail. This seems to be caused by a “fit to noise” which makes my neural network’s predictions exceedingly random, failing to predict anything true about future market behavior. As I have mentioned extensively in the past, it is very easy to over-fit when training neural networks to predict financial time series. The trick, and this came after tons of sweat and testing, is that a neural network which is able to predict successful outcomes in financial time series must be trained in a very “relaxed” fashion. You simply cannot train your network to fit exactly what you expect, because it needs to understand some very general aspects of the data which are promptly eliminated when the network is overtrained. But how can you control the amount of training you introduce into a network so that it fits the general aspects of the market but avoids the noise? The answer, whose details I will also leave to some Asirikuy video in the near future, involves the use of a much simplified training topology which restrains the possibilities the neural network has to learn. Less is more in the world of neural networks for financial time series :o)
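One rough way to see what a "simplified topology" means is to count the free parameters a network has to fit. The Python sketch below is an illustration under assumptions: the layer sizes are hypothetical and are not Paqarin's actual topology, which is not given in this article.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feed-forward network.

    layer_sizes lists the number of units per layer, inputs first.
    Each unit in a layer has one weight per unit in the previous layer plus a bias.
    """
    return sum(
        (n_in + 1) * n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical topologies with four inputs and one output.
large = count_parameters([4, 20, 20, 1])  # two hidden layers of 20 units
small = count_parameters([4, 2, 1])       # one hidden layer of 2 units

print(large, small)
```

With only a handful of adjustable values, the smaller network has far less room to memorize noise in past data, which is the intuition behind "less is more" here.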

But how does Paqarin trade? As I mentioned before, I wanted to get clearer associations, and these came from simple comparisons involving market movements and directions. I will not go into any deep details, but the overall logic of the neural network involves the use of daily OHLC values and the normalization criteria which I have kept out of this article (although it is nothing fancy, I assure you). The biggest innovation in Paqarin, which is what I believe makes it such a great improvement over Sunqu, is that by making the network train only on certain days of the month I have managed to reduce the back-testing time tremendously. Does this introduce a selection bias? No, because the system can be profitable regardless of the day on which you do this. The system can generate profits if it trains on every Monday or on every Friday, but simulations run drastically faster because far less training is carried out. Another thing is that the simpler logic eliminates the need for large committee sizes to increase reproducibility, so Paqarin generates results with decent reproducibility even if the number of committees and the number of networks inside them is quite low. In fact you can use just 10 networks to reproduce pretty stable results, while higher numbers don’t seem to help much more. As I have mentioned before, the remaining reproducibility problem seems to be related to the ANSI C random number generator, which I have been hoping to replace with a Mersenne Twister type algorithm (within the next few weeks I hope!) to test whether this is or isn’t an issue.
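The committee idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not Paqarin's code: each "network" is a single untrained tanh unit, the input values are hypothetical, and training is left out entirely. The point is only to show averaging over 10 members and how a seeded generator makes a run repeatable. Python's standard `random` module uses the Mersenne Twister as its core generator.

```python
import math
import random


def make_member(seed, n_inputs):
    """Build one toy committee member: a single tanh unit with seeded random weights."""
    rng = random.Random(seed)  # independent Mersenne Twister stream per member
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
    bias = rng.uniform(-1.0, 1.0)

    def predict(inputs):
        return math.tanh(sum(w * x for w, x in zip(weights, inputs)) + bias)

    return predict


def build_committee(base_seed, size, n_inputs):
    """Create `size` members whose seeds are base_seed, base_seed + 1, ..."""
    return [make_member(base_seed + i, n_inputs) for i in range(size)]


def committee_predict(members, inputs):
    """Average the members' outputs; the sign could be read as a direction."""
    return sum(member(inputs) for member in members) / len(members)


# Hypothetical normalized inputs derived from daily OHLC values.
inputs = [0.2, -0.1, 0.4, 0.0]

run_a = committee_predict(build_committee(42, 10, 4), inputs)
run_b = committee_predict(build_committee(42, 10, 4), inputs)
print(run_a == run_b)  # same seeds give the same committee output
```

Fixing the seeds removes run-to-run variation from weight initialization, which helps separate genuine instability in the strategy from randomness in the generator.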


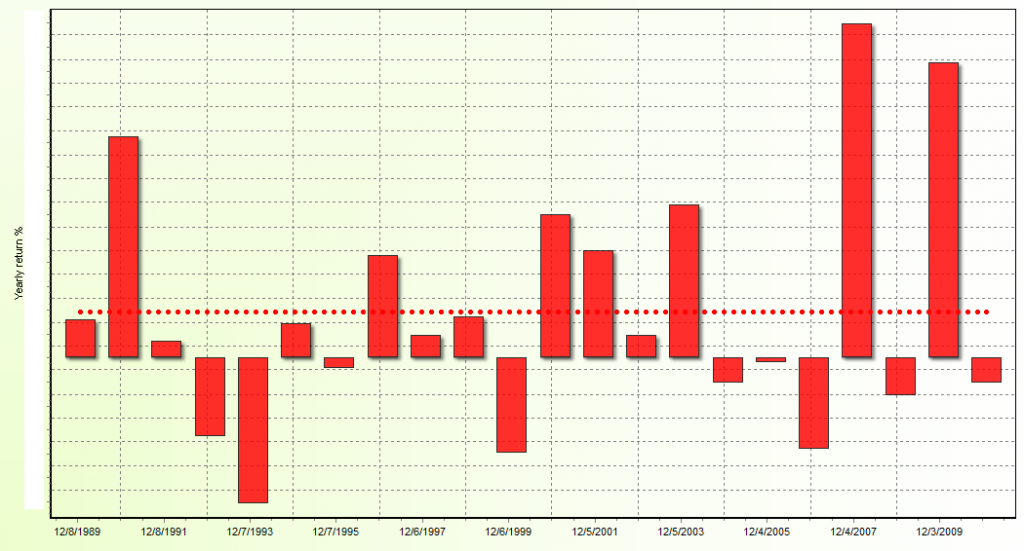

Another thing I want to mention, just to end this small introduction, is that Paqarin is by no means a holy grail. As you can see from the above, this system also suffers from deep drawdown periods which you will need to go through if you want to trade this strategy. Its net profits and profit to drawdown ratios are also not as high as Sunqu’s, so you should expect lower profitability from this system. Another important thing worth mentioning is that Paqarin can be used to build portfolios with different instances which train on different days of the month, something which generates curves with clearly different drawdown period lengths and depths, although all of them will still be quite profitable on their own. As you can see, this is a big step for us in Asirikuy because it shows that NN techniques can be built in a whole variety of ways. It also shows that you do not need a lot of complexity or effort; you just need to think smart, give the network the right inputs and ask the right questions :o). We can create systems which learn iteratively from the market and we can do this using several different techniques.
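As a sketch of how several instances could each be given their own training days, the Python snippet below builds one schedule per instance. It assumes weekday-based schedules (every Monday, every Friday) as in the example mentioned earlier; the instance names and the date range are hypothetical.

```python
from datetime import date, timedelta


def training_days(start, end, weekday):
    """Return all dates in [start, end] that fall on `weekday` (0 = Monday ... 6 = Sunday)."""
    offset = (weekday - start.weekday()) % 7  # days until the first matching weekday
    day = start + timedelta(days=offset)
    days = []
    while day <= end:
        days.append(day)
        day += timedelta(days=7)
    return days


# Two hypothetical portfolio instances that retrain on different weekdays.
start, end = date(2011, 1, 1), date(2011, 1, 31)
schedules = {
    "monday_instance": training_days(start, end, 0),
    "friday_instance": training_days(start, end, 4),
}

for name, days in schedules.items():
    print(name, [d.isoformat() for d in days])
```

Because the schedules never overlap, each instance sees the market at different moments, which is one plausible reason their drawdown periods differ in length and depth.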

Testing is still going on and I expect to release Paqarin to the Asirikuy community within the next few months. Before release I want to test it with a new random number generator, generate tests for other pairs (such as the USD/JPY and the USD/CHF) and implement some cool visualization features, such as Kohonen self-organizing maps, so that we can get a graphical representation of how neural network weights vary as a function of the different random weights at the beginning of the training procedure. Paqarin is the next step in neural network development and I also hope to couple it with the NNMM technique mentioned in the last post (which should be available soon at Asirikuy :o)). Of course, if you would like to learn more about NN trading techniques and how you too can develop systems through sound understanding and education, please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach towards automated trading in general. I hope you enjoyed this article! :o)

Daniel-

Congratulations on your latest achievement!

As you know I have done some work with neural networks recently, and can certainly identify with your comment about “spectacular losses” resulting from some of your failed efforts. Coming up with a strategy that is both simple and yields profitable results is extremely difficult.

And I continue to think the answer lies in pre-processing of the data, something you didn’t do at all with Sunqu which continues to baffle me. It sounds like you have come up with a unique method of scaling or pre-processing the data here, or perhaps segmenting it by hour of the day or day of the week.

I think sometimes we expect too much from neural networks based on their computational ability and breaking complex problems down into much simpler ones is the direction to take.

Looking forward to reading about it in detail on Asirikuy.

Take care,

Chris

Great news! Hope we will have more systems of this type in the future which totally obliterate the optimization problem, which is an ongoing struggle inside Asirikuy :)

Glad you chose to use the Mersenne Twister, excellent random number generator and very fast

interesting news – maybe it would be possible to adapt a method for randomizing like the one used on the web site/service random dot org, where atmospheric noise is used :o)

thanks for the article!

//Jacob