Machine learning is one of the most interesting ways we currently have for the generation of trading strategies at Asirikuy. If you have read my blog you may know that we have done a significant amount of research in this topic, from systems using trade outcomes as outputs to strategies that use a variety of different indicators as inputs. However the current problem we have is related to the cost of doing machine learning simulations and how this radically reduces our possibilities to find and properly test these systems. On today’s post I am going to talk a bit about this problem as well as how we plan to change our machine learning mining methodology in order to use the power of GPU (Graphics Processing Unit) technology to achieve much faster simulations. Granted we will need to employ a few tricks to do this, tricks that I am going to be sharing with you on this article.

–

–

First of all let me say that our individual machine learning simulations are not slow by any means. Currently it takes us around 40 seconds to perform a 30 year simulation of a constantly retraining neural network trading system on 1H data. This means that performing single back-tests is no problem and this is what has allowed us to test a significant variety of ideas during the past year. The problem however comes when we decide to mine large machine learning spaces for systems since the parameter spaces we need to search are very big and we also need to perform simulations on random data to ensure that our systems have a low probability to come from chance. This means that we need to mine the space not only once, but potentially 20, 30 or 40 times until we are able to find a convergent distribution for the score statistic of systems found on random data.

Right now we are restricting our spaces to mine only around 10-15 thousand systems per experiment but this means that the number of systems with historically high Sharpes is expected to be very small and therefore each experiment only adds 1 or 2 systems to our machine learning repository after checking for a low probability to come from random chance. Given the fact that it might take 2 months to complete an experiment at this pace – since it takes the 8 cores of an i7-4770K roughly a day to complete a single random run – it is extremely slow and inefficient to build a machine learning portfolio in this manner. So what can we do about it?

–

–

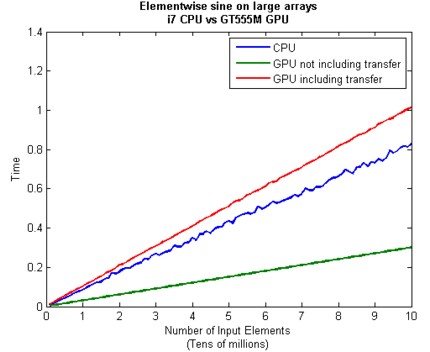

Ideally you would want to perform simulations like this in some manner that is much more highly parallel and efficient, something like a GPU. The problem with moving these simulations to the GPU is that these systems are not very simple price action based strategies – which we have moved to full GPU mining – but they are complex machine learning strategies that rely on rather sophisticated C++ libraries to do the job. Since we’re not interested in making the machine learning itself run in parallel but we want to have parallel back-testing execution of separate systems we would need to implement all the machine learning functions within openCL kernels. Since openCL cores are much slower than an i7 core and particularly they are very bad at the non-sequential memory access required to constantly retrain a machine learning algorithm it becomes absolutely clear that moving the whole thing to a GPU is simply a big no-no.

But if there is a will there is a way (thanks to James for contributing his initial thoughts on this idea on the forum!). Given that the machine learning part is the part we do not want to run within the GPU but given that this is just a very small part of the variations that we subject the trading strategies to it makes sense to calculate machine learning predictions using a CPU and then use those predictions to carry out system simulations on the GPU varying all the other things outside the ML algorithm that you would want to test. So this means to run all variations involving the ML model within the CPU, generate arrays containing all the predictions and then perform the larger scale mining involving things like ensemble construction, hour filters, stoploss variations, trailing stop implementations, etc on the GPU. This means that you can vary predictions to generate something like 540 machine learning prediction arrays (basically 540 variations of the machine learning algorithm) and then expand that to 3 million systems using variations in other parts of the strategy that can be tested with the GPU.

–

–

In the end you have a compromise between both worlds. You have a very slow initial step where you have to generate predictions for the variations of the machine learning algorithm for all bars within the data and then you have a very fast step where those predictions are used in GPU based simulations to actually mine for trading systems and perform the data-mining bias assessments performing all strategy variations that are not related to the machine learning algorithm. The result is that you expand your potential universe of strategies by a factor of around 100x since you no longer need to explore around 10-15K system spaces but you can actually explore a 3 million system space within the span of probably less than a week. This means that instead of adding 1 strategy every month we will potentially be able to add a few hundred. Of course these simulations will involve the transfer of some significantly large prediction arrays, which can be quite detrimental to GPU performance (see the image above). I will talk about our actual performance gains within a future post.

As you may suspect I am currently working on this implementation at Asirikuy – generating the first set of prediction files as I write this post – and hope to release some initial results to the community later during the week. Of course if you would like to learn more about our community and how you too can design and create your own machine learning systems please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach towards automated trading.