During the past two years it has become clear to me that a key to successful algorithmic trading is the ability to generate systems in a reliable, consistent and effective manner. In order to achieve this goal I have created a price-action based system generator, the Kantu program, which allows me to generate strategies that fit some predetermined statistical characteristics. However one of the big limitations of Kantu has been the speed of its system generation – which although very fast is still limiting – which hampers our ability to explore multi-instrument systems and lower timeframes as the computational cost becomes too great as the number of bars increases significantly. In order to solve this problem we have implemented a new system generator (which we call PKantu) that uses OpenCL in order to take advantage of modern GPUs in order to massively parrallelize the system generation efforts. Within this article I want to share with you how this idea works, how we have implemented it and how it dramatically improves our generation capabilities. I would also like the opportunity to thank Jorge – and Asirikuy member – who has helped greatly in the coding and testing of this new implementations :o).

–

–

The initial Kantu implementation (see here) is a traditional program coded in Lazarus (freePascal) that uses the CPU in order to generate trading strategies. The program has multi-core usage capabilities but its throughput is limited by the small number of cores within a CPU as well as the additional processing power required to perform user interface updates and synchronizations. Overall this implementation is fast (60 ms for a 1H, 25 year test) but the gigantic size of the available number of system combinations makes this speed too low as exploring lower timeframes or multiple symbols successfully would involve at least several billion combinations which can last weeks or months under the current Kantu setup (depending on how low the timeframe is or how many symbols you use). Optimizing the CPU usage is unlikely to bring any improvements above 20-30% so we needed a radical solution to get ahead of this problem.

There are mainly two routes that we could go through in order to solve the above problem. The first is to massively parallelize a CPU implementation across many different computers. This involves either some form of cloud computing (many users contribute to system generation) or the use of a large computer cluster. This route is energy intensive, expensive and difficult to implement since users would need to both agree on what they want to achieve and then secure computational resources to achieve this goal. Overall problems coming from failures in network/cluster nodes, data gathering, etc could prove to be too demanding compared to the obtainable reward so this was definitely a road we did not want to walk.

–

–

The second idea involves the use of General Processing Units or GPUs. These cards – which are popularly used for graphics – have been optimized for high parallelization and contain a highly optimized yet limited set of instructions that can be used in order to carry out general process calculations. Using the OpenCL language standard you can “easily” write code that uses the power of the GPU for a non-graphic computation using the C language. Since the original Kantu implementation is not suited for the use of OpenCL (the way it’s implemented in FreePascal makes this unattainable) we had to re-code the core Kantu framework on another language in order to take advatange of OpenCL.

Since the idea of this new implementation was to make things as fast as possible, we decided to go with a simple python/pyopencl implementation that works entirely from the console. The pyOpenCL library contains very simple-to-use bindings for OpenCL so we could easily build our PKantu core-code using this implementation. Writing C code for OpenCL is unlike writing general C code, as the OpenCL compiler has some heavy limitations that require some use of your imagination in order to do things that you could more easily do in standard C. For example in OpenCL you cannot use the realloc, malloc, dealloc functions commonly used to shape dynamic arrays so you must pass arrays with predefined sizes and ensure that you have enough memory within your GPU to handle all this load.

–

–

It is also worth mentioning that the clock of the GPU processors run much lower than your usual CPU core. While my i7 clocks at about 3.4GHZ without any over-clocking, the cores of high-end GPUs barely make it close to the 1 GHZ clock frequency. This means that a single GPU core generally has a poorer clock performance compared to your usual CPU, meaning that the generation of a single system can take longer than for a CPU. Clearly when doing serial computing the GPU is no match for the power of the much-more-powerful-per-core CPU. The real power of the GPU comes from the parallel computing power it has, with a GPU having dozens of cores and often thousands of stream processors, your advantage comes from the fact that you can process systems in batches of hundreds to thousands while on a CPU you’re limited to a number of systems equal to the number of available threads (8 on a quad-core i7). This means that in reality I can generate about 100-1000x more systems compared to the traditional CPU implementation.

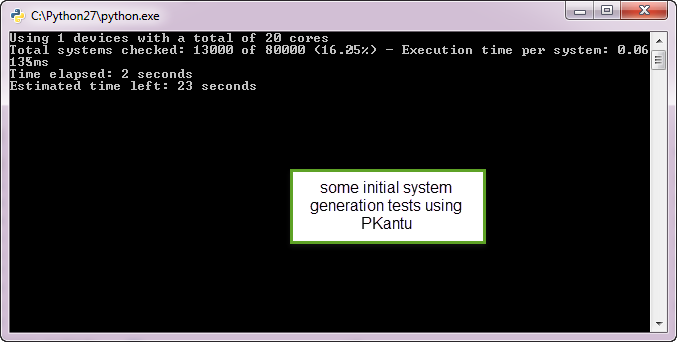

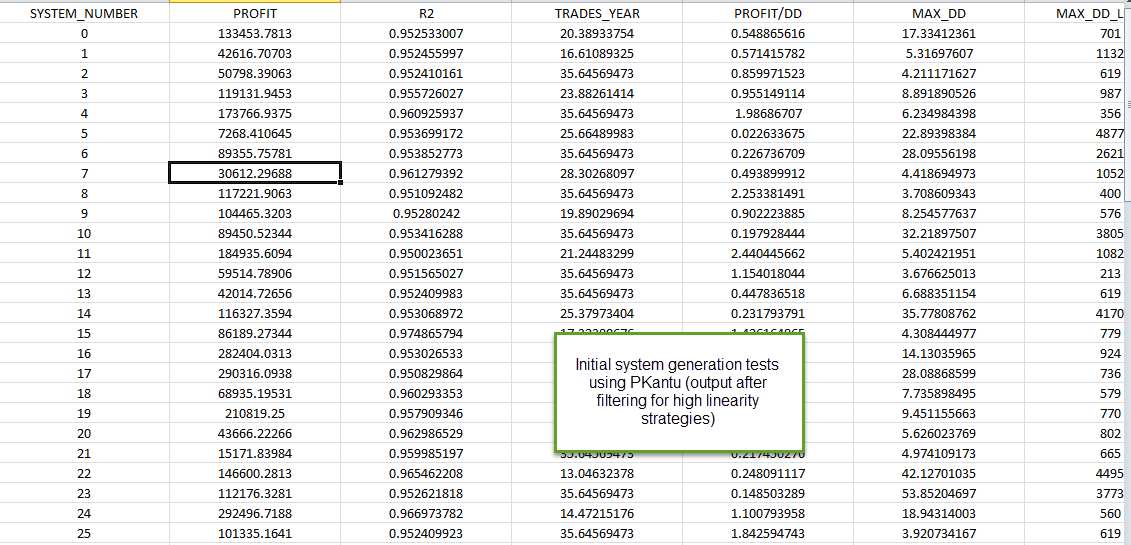

After coding the basic core of the program and implementing most basic functionality we have been able to run some initial benchmarks and tests. Although we haven’t received our powerful GPU cards yet (I’m waiting for my Radeon 7970 to arrive!) our current implementation already shows dramatic results on our low to medium range GPUs. System generation on the daily timeframe went from about 3ms for a 13 year system to about 0.05ms (about a 100x improvement) while the systems/second generation increased even more dramatically thanks to the massive parallelization of the GPU. Do you want to generate 1 million systems? No problem, it takes PKantu less than a minute. Did you think that 10 billions logic space couldn’t be explored? PKantu can probably do that in a day. That said, we haven’t even ran experiments on high end cards – which we are waiting for as said above – so the above numbers will potentially increase greatly once we have this additional power :o).

PKantu is already opening up the road towards a new age in automatic system generation for our trading community. There is no other system generation implementation in the market which is as fast or as efficient (that I am aware of) so this will give us a great number crunching edge over any competition using data-mining (at least against those who do not use a GPU). PKantu is still in heavy works but I seek to make it available inside our community within the next month :o) If you would like to learn more about automated system generation and how you too can generate your own system portfolio please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach towards automated trading in general . I hope you enjoyed this article ! :o)

“there is no other system generation implementation in the market which is as fast or as efficient (that I am aware of) so this will give us a great number crunching edge over any competition using data-mining”

Crunching edge does not translate directly to system edge, I hope you agree to that.My associate developed software in C++ 10 years ago that is much slower than Kantu but does a great job in identifying systems that stay profitable for up to 3 years. I do not care to wait for an hour if I get a system that deals effectively with issues like DMB and curve-fitting. These were the first problems my associate solved before he programmed his data-mining program. It seems you are going backwards. If you do not solve the DMB problem you will end up providing more ways to lose money but now a lot faster. The reason that you have not solved the DMB issue in Kantu is that the curve-fitted systems Kantu generates always appear significant because they are outliers by design. To effectively estimate the DMB you must consider all the systems Kantu considers in its way of selecting a best performers. Otherwise you are just fooling yourself. If you do that you will find out that your DMB is extremely large and no system generated the Kantu way can be declared significant.

Honestly, I get the impression that you are trying to mask fundamental issues in Kantu with tech advancements. I do not care if Kantu takes 2 days to run as long as at the end I will get something that is profitable. A $10 worth of extra electricity cost is insignificant when compared to tens of thousands in account losses? I hope you understand my philosophy and I strongly believe that your emphasis in speed gains when fundamental issues have not been solved even remotely is irrelevant.

Hi Bob,

Thanks for posting :o) Yes, clearly number-crunching edge does not translate directly into a market edge (that depends on the user!) but it’s definitely good to have faster mining implementations with more possibilities. At the very least it provides us with an ability to perform a lot more experimentation.

How do you know I am not already doing this? You should consider – before you judge what I do – that I do not post everything I do concerning my development/selection methodology. In the same manner that you do not post your exact detailed development methodology within your comments, I do not post everything I can do within blog posts. Perhaps you infer that no system generated by Kantu can be declared significant from what you read in my blog, this does not mean that I cannot generate systems that can be tested to be significant using Kantu and others tools within our community. When reading my blog, consider this as a small part I share concerning my overall journey in trading but do not take this as a literal journal of all my trading developments.

I am also not masking “fundamental issues in Kantu”, the software is available as it is, how successful or unsuccessful a person is with it depends on how that person uses the information created by it. It depends on how you deal with all the issues you have so diligently raised within your comments. It’s a tool, like a hammer, how good you do with it depends on how much you understand it. The manual, software page, etc are filled with warnings saying that Kantu can lead to loses if the people who use it do not understand what they are doing. The technological improvements are important in my view and I do believe they improve the usefulness of the software for me and others within our community.

You have made some good points in your comments, I always take them into account within my data-mining process. Thanks again for commenting Bob,

Best Regards,

Daniel

“How do you know I am not already doing this? You should consider – before you judge what I do – that I do not post everything I do concerning my development/selection methodology. I”

Sorry Daniel but I can only judge by what I read. If you have secret developments I have no way of knowing that and I suppose you have. As you see I was pretty open in sharing my thoughts, the results of many years of exposure to this area. My point is that incremental gains in speed are of little value to the community of traders because 80% of the time is spent on trading and thinking about systems and trading. As I said I do not care if Kantu takes 2 days or even 4 days to get results as long as they are good.

“The manual, software page, etc are filled with warnings saying that Kantu can lead to loses if the people who use it do not understand what they are doing. ”

Of course this is true provided that Kantu knows what it is doing, something that I have doubts about having read previous blogs.Otherwise this is just a reversal of blame on the user. My point is that users of such software can do very little to minimize DMB because of the issues previously discussed.

If you properly follow my suggestion by calculating DMB on a large number of random series you will see that it just ca’t go away. It is impossible as it is inherent in the process. Therefore the incremental gains in speed are irrelevant and somewhat alienating.

Hi Daniel,

I don’t mean it wrong, but totally agree with Bob. Sometimes I have the feeling that your research is going in the opposite direction from trading i.e. backwards. Why do you generate pseudo random strategies, that have been succesfull in last years, when you know that every monment on the market is unique and won’t repeat in the future. So your tradings strategies cannot be sucessfull for longer then few months.

So this contribution is only to remind you what trading is all about: support & resistances (supply, demand), trends and volatility (momentum) & money mangement, psychology and discipline.

Best regards,

JojoW

P.S.: You don’t need rocket science to profit in markets.

I agree with JojoW and after many years of trying NNs, GPs and almost everything along these lines you can imagine, spending thousands on programming fees and development, I came to realize that a simple s&r approach with sound risk management and maybe a little of averaging up or down cannot be beaten.

Daniel, there is a GP engine going for less than $200 some people use to develop software and sell it for tens of thousands of $$$ to ignorant people who do not know the difference between gold mining stocks and data mining bias: http://www.rmltech.com/

Markets change constantly. I think in a previous post you admitted that. But then you are trying to get faster ways of creating “pseudo random systems” as JojoW said. You have to answer first the following question for your research project to make sense: what is the probability that my best performing system generated by my data-mining method is random. Unless you have a specific procedure that is well-qualified to answer this question, you are probably wasting your time. This is of course friendly advice. I by no means try to undermine your good work but to share my experience and prevent others from paying for the same mistakes I made once.

Hi Daniel,

It’s really astonishing the speed at which you deliver new tools aiming to increase chances to profitability.

Many times i tried to you to proceed at a slower pace to let the whole asirikuy community to digest all the matter, giving you at the same time the chance to consolidate and fine tune the results obtained.

But the question is: will the newcomer PKantu send to retirement the short-lived elder brother Kantu ?

Best regards,

Rodolfo

Hi Rodolfo,

Thanks a lot for your post :o) You can thank Jorge for encouraging and helping me write this new PKantu implementation! Many software developments are spear-headed by the desire of some Asirikuy members to test new ground. As you know I love to encourage new tests and developments so we always end up developing new tools for our community.

Regarding the old Kantu, PKantu is still a month or so away (it is still in the very early development phase) so the old elder brother will be able to live for at least several more months. Even if I stop active development of the old Kantu – which may eventually happen – it will remain supported within our community. Thanks again for your comment Rodolfo :o)

Best Regards,

Daniel

Amazing is all I can say! Keep up the great work Daniel, there is simply no one else doing this like you and at that pace in the trading industry! Amazing!

Hi Daniel,

Fantastic step forward, well done! If you ever need more power, Amazon EC2 offers GPU instances. Theoretically you can create n number of GPU instances and run the system generation on that cloud to reduce the generation time to roughly t/n

How much is each GPU instance using Amazon cloudfront? are they charging by the bandwidth (GB), electricity usage (Kilowatt hour), etc?