Through the many posts within the “Neural Networks in Trading” series we have discussed important aspects of neural network design and other matters relevant for the building of algorithmic trading strategies. Today we’re going to be talking about what I believe is the most fundamental problem with the creation of Neural Network based strategies, a problem which proves in fact that the design of a neural network to predict the market in a very accurate way might simply not be possible. Now this doesn’t imply that the building of profitable systems cannot be achieved using networks – something we have proved quite conclusively with Sunqu at Asirikuy – but that having systems which predict the market in very accurate ways to generate unlimited amounts of wealth isn’t actually a likely reality. If you haven’t read my previous posts about neural networks in trading please check them out before proceeding :o)

Neural networks are very efficient algorithmic engines built from mathematical functions which can approximate the underlying mathematical relationships behind almost any nonlinear series of data points. The main idea behind neural networks is to discover complex and inherently hidden relationships within data which can later – and hopefully – be used to draw useful predictions. As I have explained extensively through other articles this is especially well suited for systems that are inherently deterministic (such as the corrosion ratios of metal samples with different composition) but it starts to fail as the chaos within systems increases. But why does this happen? Why does accurate prediction of how the market will change turn out to be so hard? Why isn’t the “discovery” of fundamental mathematical relationships enough to forecast tomorrow’s EUR/USD close with a very high accuracy rate?

–

The problem lies in the inherent contradiction between complexity and prediction accuracy. When we attempt to model the markets using a neural network approach we need to get a given degree of accuracy within our training which should be similar to the level of accuracy desired within the final prediction (if we rely on work related to deterministic systems). The more you want your prediction to be accurate the more functions (neurons) you need to use and the more neurons you use the more complex the network gets (meaning that it can make more complex mathematical relationships). When training a network to predict the EUR/USD daily closes using past close values I could get an accuracy of +/- 1 pip within the training set if I use a neural network which uses data for the past 60 candles and at least 2 hidden layers with these layers having 240 neurons each. In the end the neural network has an enormous structure which enables it to train with a high degree of accuracy to reach very precise results within the training period.
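To get a feel for how large such a structure is, the short sketch below counts the adjustable weights and biases of the network described above. It assumes a plain fully connected network with one bias per neuron and a single output (the predicted close), and it assumes roughly 20 years of daily candles at about 260 trading days per year; none of these details are stated in the experiment itself, so treat the numbers as an order-of-magnitude illustration only.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feed-forward network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weights plus one bias per neuron
    return total

# 60 past closes in, two hidden layers of 240 neurons, one predicted close out.
# The single output neuron is an assumption made for this illustration.
layers = [60, 240, 240, 1]
n_params = count_parameters(layers)

# Assumption: about 260 trading days per year over 20 years of daily data.
# Each training sample needs 60 earlier candles, so the first 60 days are lost.
n_samples = 260 * 20 - 60

print("adjustable parameters:", n_params)
print("training samples:     ", n_samples)
print("parameters per sample:", round(n_params / n_samples, 1))
```

Under these assumptions the network has tens of thousands of free parameters but only a few thousand examples to learn from, which is why it can match the training period almost perfectly.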

What happens when you attempt to draw predictions? Complete disaster. You don’t get anywhere near the accuracy you supposedly should have, and although your neural network fits the past in an almost perfect fashion it has no capability whatsoever to predict the future. Is the network “curve fitted”? Many would define the problem this way but I believe this is the wrong name for it, since the problem is not that the fit of the network is “too tight” but that the network is too complex. You can find evidence for this if you repeat the experiment by retraining the network: suddenly you notice that your predictions are very different from the ones made before. Why is this the case if you have trained a network with the exact same topology and data as before? The problem is that the number of solutions which can fit the data is too large, so you will find N solutions which all reproduce the training set well but many of which will not have captured the real relationships within the past data.
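The retraining experiment can be reproduced in miniature. The sketch below is not the 60-input, 2x240 network discussed above; it is a deliberately tiny one-hidden-layer network written in plain Python, trained on synthetic random changes (not real EUR/USD data), with more parameters than training samples. All names (`make_samples`, `train`, `forward`, `rmse`) and all settings (12 hidden neurons, 150 epochs, learning rate 0.03) are choices made for this illustration. The only thing that changes between runs is the seed for the initial weights.

```python
import math
import random

def make_samples(n_points=36, lag=5, seed=1):
    """Synthetic random changes (NOT real market data), cut into windows."""
    rng = random.Random(seed)
    changes = [rng.gauss(0.0, 0.5) for _ in range(n_points)]
    xs = [changes[i:i + lag] for i in range(n_points - lag)]
    ys = [changes[i + lag] for i in range(n_points - lag)]
    return xs, ys, changes[-lag:]  # the last window has no known target yet

def forward(x, w1, b1, w2, b2):
    """Return hidden activations and the network output for one input."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(w1, b1)]
    return h, sum(w * hi for w, hi in zip(w2, h)) + b2

def train(xs, ys, seed, hidden=12, epochs=150, lr=0.03):
    """Plain stochastic gradient descent on squared error."""
    rng = random.Random(seed)  # the seed only controls the initial weights
    lag = len(xs[0])
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(lag)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            h, out = forward(x, w1, b1, w2, b2)
            err = out - y
            for j in range(hidden):
                grad_h = err * w2[j] * (1.0 - h[j] ** 2)  # uses w2 before update
                w2[j] -= lr * err * h[j]
                b1[j] -= lr * grad_h
                for i in range(lag):
                    w1[j][i] -= lr * grad_h * x[i]
            b2 -= lr * err
    return w1, b1, w2, b2

def rmse(xs, ys, params):
    """Root mean squared error over the training samples."""
    errs = [(forward(x, *params)[1] - y) ** 2 for x, y in zip(xs, ys)]
    return math.sqrt(sum(errs) / len(errs))

xs, ys, next_window = make_samples()
results = []
for seed in range(3):
    params = train(xs, ys, seed)
    forecast = forward(next_window, *params)[1]
    results.append((rmse(xs, ys, params), forecast))
    print(f"seed {seed}: training RMSE {results[-1][0]:.3f}, "
          f"next-step forecast {forecast:+.3f}")
```

Same topology, same data, same training procedure: each run lands on a different set of weights and therefore gives a different forecast for the next, unseen step. Since the synthetic targets here are pure noise, whatever each network has "learned" is by construction not a real relationship.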

What you have when you get very accurate predictions through training is a neural network which describes the problem perfectly in just one of the immense number of ways in which such a network can. When you increase network complexity to get a better fit you are also increasing the number of possible solutions that yield the same answer, and eventually (and actually quite easily) this number becomes so large that the capacity to predict anything is completely lost. You would think that building a committee would work here (getting results from various networks and then doing what most of them say) but this becomes impractical for large networks because the committee sizes required to cover a good portion of the solution space are also tremendously large.

Another serious problem is that, even if you could carry out this large set of simulations (perhaps more than 100K), the finite precision of the processor can affect the solution of the neural network to the point where two successive runs of the same neural network with the same weights yield different results. Financial systems have an inherently significant chaotic component, and when you’re aiming for very accurate predictions even minute changes in the initial weights of the neural networks can change future predictions, since minute variations within the functions can sometimes be amplified into large differences. Even if the processor works with extremely high precision, the small rounding errors that remain within its tolerance can cause problems for this type of simulation.
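Both halves of this argument can be illustrated without a neural network. The sketch below first shows that floating-point addition depends on the order of operations, so the same numbers summed in a different order need not give the same result. It then uses the logistic map, a textbook chaotic system chosen here purely as a stand-in (it is not a market model and does not appear in the experiments above), to show how a difference of one part in a trillion grows until two trajectories have nothing in common. The function name `logistic_path` and the starting values are assumptions made for this example.

```python
def logistic_path(x0, steps, r=4.0):
    """Iterate the logistic map x -> r*x*(1-x), a standard chaotic system."""
    path = [x0]
    for _ in range(steps):
        path.append(r * path[-1] * (1.0 - path[-1]))
    return path

# 1) Rounding: the same three numbers, summed in a different order.
a = (0.1 + 0.2) + 0.3
b = 0.1 + (0.2 + 0.3)
print("sums equal?", a == b, "| difference:", abs(a - b))

# 2) Amplification: two starting points that differ by 1e-12.
base = logistic_path(0.3, 80)
nudged = logistic_path(0.3 + 1e-12, 80)
gaps = [abs(p - q) for p, q in zip(base, nudged)]

print("gap at step 0: ", gaps[0])
print("gap at step 20:", gaps[20])
print("largest gap within 80 steps:", max(gaps))
```

The initial gap is far smaller than anything a price feed could resolve, yet it roughly doubles with every step, so within a few dozen iterations the two paths are unrelated. A model that is itself sensitive in this way will turn rounding-level differences into different forecasts.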

–


However, perhaps the biggest issue is this: even if you had millions of solutions depicting all possible mathematical relationships that yielded accurate training results, and you made a committee to decide based on what most neural networks say, there is still a probability that the “true” underlying basis that yields predictive power is a minority within the large set of solutions. You therefore don’t know which networks are right and which ones are wrong, and you could only make a decision if a very large percentage of them agree (or if all of them agree). If the committee is very large then the probability of all networks reaching an agreement approaches zero quite fast, because the paths that lead to the prediction have an inevitably gigantic amount of freedom due to the network’s innate complexity.
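How fast "quite fast" is can be shown with a back-of-the-envelope calculation. The sketch below makes a strong simplifying assumption that is not part of the argument above: each network in the committee votes "up" or "down" independently, with the same probability `p_up` of voting "up". Real committee members trained on the same data are correlated, so this is only a rough illustration of the trend. The function name `p_unanimous` is made up for this example.

```python
def p_unanimous(n_networks, p_up=0.5):
    """Chance that n independent up/down voters all give the same answer.

    Assumption: votes are independent and identically distributed, which
    real networks trained on the same data are not.
    """
    return p_up ** n_networks + (1.0 - p_up) ** n_networks

for n in (1, 5, 10, 20, 50):
    print(f"{n:>3} networks: "
          f"coin-flip voters {p_unanimous(n):.2e}, "
          f"70% 'up' voters {p_unanimous(n, p_up=0.7):.2e}")
```

Even when every network leans 70% towards the same direction, unanimous agreement becomes vanishingly rare once the committee has a few dozen members, so a rule that only trades on full agreement would almost never trade.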

In essence the problem of system building with neural networks is that we can achieve very precise training results by using a higher level of NN complexity, but in doing so we also greatly reduce our ability to draw useful predictions from those relationships, because “digging” into the neural network solution space (all possible solutions for the problem) becomes very difficult. If you lower the level of complexity then your ability to make decisions increases (you can explore more of the solution space) but your ability to fit values within training sharply decreases. In the end you are caught in a struggle between the complexity of decision making and the accuracy of training results. How do you solve this issue?

In order to tackle the problem of Neural Network complexity and training accuracy we need to consider the actual nature of the problem. We want a neural network to learn about some mathematical relations which lead to market inefficiencies but we want to retain the ability to make simple decisions without being blocked by the NN’s complexity. In order to achieve this you must think “outside the box” and learn how to get a simple NN to yield results that have a statistical edge which is sustained through time. A simple NN allows you to make decisions very easily (the number of possible solutions is small) but the problem becomes finding an actual edge that works. With a complex network you know exactly what you would trade (for example, if you predict the close you would just enter the day in its direction) but you cannot decide which of the many solutions to trust. With a network that doesn’t reach such a good fit in training you have to find the inefficiency yourself, because the network won’t predict what you want it to very well (you need to explore the relationship between the network’s prediction and the actual value of the predicted target).
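One simple way to start exploring that relationship is to ask whether the network’s direction call is right more often when its predicted move is large than when it is small. The sketch below is only an illustration of that kind of analysis. It is not the rule used by any system mentioned in this article, the function name `hit_rate_by_bucket` is hypothetical, and the numbers are placeholders invented to show the mechanics, not results.

```python
def hit_rate_by_bucket(predicted, actual, edges):
    """Share of correct direction calls, grouped by size of predicted move.

    `edges` are ascending upper bounds on |predicted|; each prediction is
    counted in the first bucket whose bound it does not exceed.
    """
    counts = {edge: [0, 0] for edge in edges}  # edge -> [hits, total]
    for p, a in zip(predicted, actual):
        for edge in edges:
            if abs(p) <= edge:
                counts[edge][1] += 1
                counts[edge][0] += int(p * a > 0)  # same sign = correct call
                break
    return {e: (h / t if t else None) for e, (h, t) in counts.items()}

# Placeholder values only (hypothetical predicted vs. actual daily changes).
predicted = [0.2, -0.1, 0.8, -0.9, 0.05, 1.2, -1.5, 0.3]
actual = [-0.1, -0.3, 0.5, -0.2, -0.4, 0.9, -1.1, 0.6]

rates = hit_rate_by_bucket(predicted, actual, edges=[0.5, float("inf")])
for edge, rate in rates.items():
    print(f"|prediction| <= {edge}: hit rate {rate}")
```

If, on real out-of-sample data, one bucket showed a hit rate that stayed clearly above 50% across many retrainings, that relationship (rather than the raw forecast) would be the candidate edge to test further.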

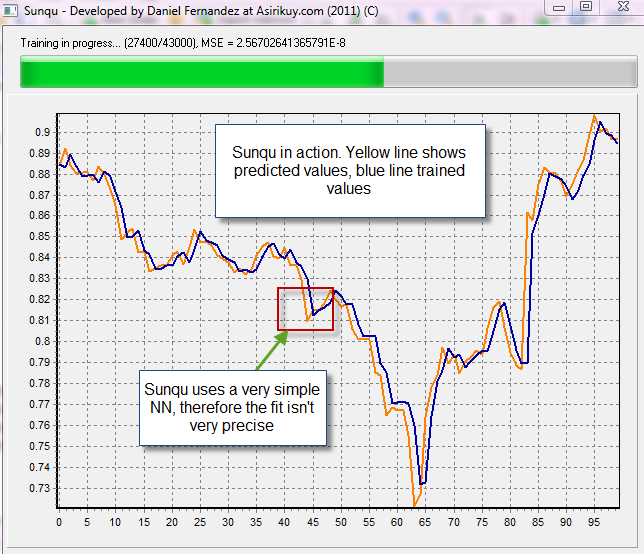

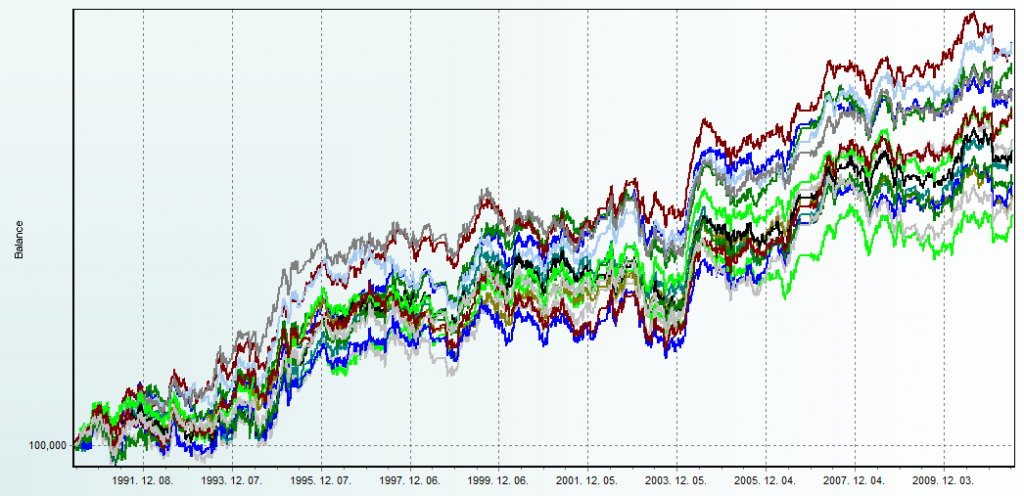

This is in essence what Sunqu does :o) This is the system we are developing in Asirikuy for trading using a neural network (already released for back and demo testing within the community). The system uses a simple neural network, which keeps the complexity of the solution space limited, and the inefficiency is exploited through a relationship I found between the network’s predictions and price variations. You’ll discover that the creation of profitable neural network systems is much more difficult than you would have thought at first, because neural networks only seem to yield market inefficiencies when relationships are discovered between simple NN predictions and actual price variations and NOT when attempting to build a very complex NN to forecast some specific market property in a desired way. The relationships built by Sunqu’s neural network are very strong and durable, with the network showing reproducible profitability on the EUR/USD and USD/CHF for the past 20 years (before 1999 on the EUR/USD the system was evaluated on DEM/USD data). This shows that neural networks can build profitable strategies, but we still have a ton to understand about how the statistics of these systems work and what the best way to trade them live actually is (whether we should trade very high committee values or simply many instances of the EA). Within the next few months we will further explore these aspects to trade Sunqu within Asirikuy, and future NN systems, in the best possible way (image above shows many different Sunqu backtests with the same parameters; the inherent variability of an NN EA is obvious but profitability is very highly reproducible).

That said, the Hamuq project (geared at building a market Oracle for the forecasting of market-wide daily closes) is also underway and making some very interesting progress in this regard. If you would like to learn more about neural networks and how to build trading strategies based on understanding and knowledge please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach towards automated trading in general. I hope you enjoyed this article! :o)

great article Daniel!

In fact, a model is properly fit when its parameters are adapted to the nonrandom portion of price movement.

A model is improperly fit or overfit when its parameters are adapted to a random portion of price movement. The proportion of the random to nonrandom components of price action sets a natural limit to the predictability of a market.

Probably, as the complexity of a neural network trading system increases, its overfit to the random portion of the data increases as well.

Umberto

Thanks for the article Daniel, always a pleasure to read your work :)

As seen with other Asirikuy systems, simplicity is usually the way to go regarding automated systems.

Another great article Daniel, and I agree by the way. I don’t believe you’ll ever be able to get a neural net to consistently tell you, with a large enough degree of accuracy, the next day’s EUR/USD closing price based on the previous day’s data.

However, if you asked it to tell you if the price was likely to be higher or lower than it currently is one month from now based on the last 20 days data you might get somewhere I guess.

There is simply too much randomness in the markets in a single day for any prediction based purely on mathematical equations to be tradeable, at least that is what my experience tells me anyway. I spent ages looking for a way to mechanically predict the next day’s price, and I never found it.