If you want to search for trading systems using data-mining, you will soon face problems related with the computational intensity of the task at hand. Even simple logic spaces (like two price action based rules) can contain millions of potential system combinations and testing all of them is fundamental to the finding of strategies worth trading. However the finding of systems on real data is only the beginning of the problem, since finding whether these combinations arose due to data mining bias (DMB) or true historic inefficiencies demands even more computational power. Today I am going to talk about how we decided to tackle the problem of computational intensity within Asirikuy and how we have developed a cloud data mining implementation that allows us to search for new systems as a trading community, joining efforts to find systems that will benefit us as a whole, helping us better understand the way in which data mining works and enabling us to explore options that would otherwise be too expensive for any one individual to explore. I will start with a brief description of the problem at hand, following with our solution and the potential benefits it will bring us looking forward.

–

–

Why is data mining so expensive? Imagine you wanted to find a system that works on 25 years of daily data (fulfills some statistical preferences) and you want to restrict your searching space to systems that use 2 price action based rules (simple comparisons between OHLC data) which contain OHLC values with shifts between 1 and 50, with a step size of 2. You know you want to restrict risk per trade, so the system you want to generate must have a stoploss that can vary between 0.5 and 2 times the daily ATR with 0.5 steps. This simple set of options gives you almost 150 million possible systems which you would need to back-test in order to obtain some statistics and know whether the system is or isn’t worth considering for live trading. Using something like our initial kantu implementation (which takes approximately 3 milliseconds for each test) would give you approximately 5 days to complete the test. Using PKantu, our latest implementation which uses OpenCL and GPUs for back-testing, would take approximately 50 minutes (0.0056 milliseconds per test).

You would then think that PKantu solves the problem, because it allows us to obtain results fast, even for large logic spaces, but the issue here is also that in order to know whether a system is worth trading live, we also need to evaluate DMB. The evaluation of DMB involves the generation of many random data series using bootstrapping with replacement and the mining on this data, which means that you need to perform the above experiment N times, where N is the number of random data series you want to run tests on. Since you want your results to converge – reach a stable distribution of systems generated on random data – this usually implies generating systems across 50-100 data series, which increases computational costs considerably. Using the above example, examining DMB on PKantu (50 random data series), takes roughly 2 days of computational crunching.

If you’re looking to generate systems on lower timeframes or on larger logic spaces, then you’re looking at crunching periods of weeks or months in order to properly carry out an analysis, with no guarantee that results will lead to something to trade. On the other hand, if you have a trading community, performing individual data mining efforts is highly sub-optimal, since we are probably interested in similar things. If this is the case, then it makes a lot of sense to join computational power and focus on solving a set of problems that is relevant to the community as a whole, contributing to the finding and evaluation of new systems for live trading.

–

–

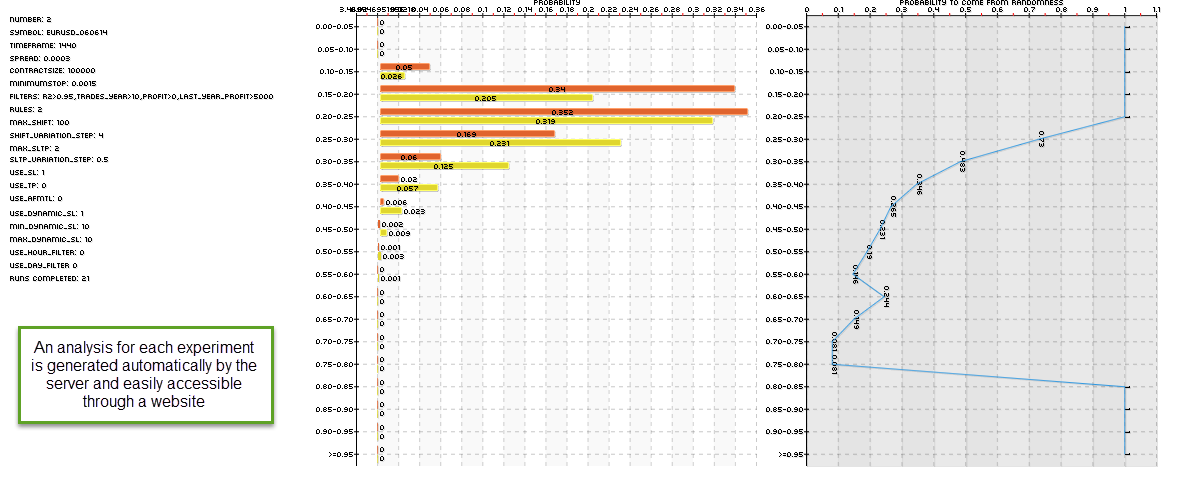

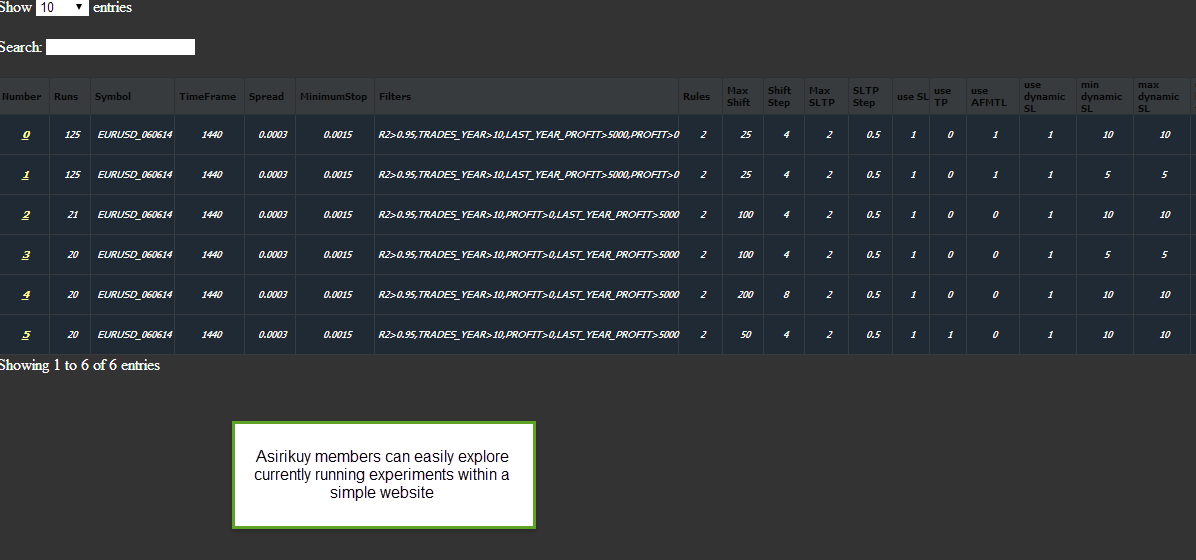

In this spirit we have developed a cloud data-mining interface which allows Asirikuy members to perform data-mining efforts that benefit the entire community. The server has a database implementation that contains all relevant experiments and the person wanting to contribute only needs to run a script that automatically obtains the experiment that needs execution and runs it on the person’s computer. Each person contributes with one system generation run on real or random data at a time and these results are then sent to the server, automatically appended and automatically analysed to generate results that can be easily seen and analyzed by the whole community. Since the analysis of DMB depends on the convergence of the distribution of systems generated on random data, each additional contribution helps refine the results and gives us new information. In this manner people don’t need to take the time to analyze/append/store/index their own results, but they can simply contribute raw computational power and the cloud environment we have created at Asirikuy does the rest.

In the end we can run things that are quite unfeasible to run for a single person, we can obtain results for many different experiments within just a couple of days (even if just 10 people are contributing!) and we can also join efforts to obtain results for a single experiment with a high computational cost (for example a logic space with 1800 million systems). The best thing is that the efforts are centralized and therefore we don’t have to perform the same experiments 20 times (which is what happens when everyone does things on their own) but experiments are performed once in a centralized manner and then everyone can benefit from the results. Furthermore, results are neatly organized within a database implementation, so it is very easy to obtain statistics for experiments.

This cloud data mining effort will be the corner-stone of the Asirikuy Trading System Repository which holds trading systems derived from data-mining (verified to represent true historic inefficiencies) and allows members to choose a portfolio that fits their own trading goals. Our system repository will be the topic of a future post :o). If you would like to learn more about data-mining and how you too can contribute to a cloud based data-mining effort please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach towards automated trading in general . I hope you enjoyed this article ! :o)

Impressive.

1.- Daniel, what data mine tool are you using right now to perform?

2.- Do you need some client part to download configs and run the test?

3.- If you use a client part, what OS are you using on that side?

Regards.

Hi Francisco,

Thanks for your comment :o) Let me answer your questions:

1. We’re using PKantu, a mining tool we developed using python and OpenCL. This allows us to mine using the power of GPU and/or CPU computing.

2. Yes, a client part is needed but configuration files and tests are ran automatically as directed by what the server requests.

3. The client runs in Windows, Linux or MacOSX.

I hope this answers your questions. Thanks again for posting,

Best Regards,

Daniel

Hi Daniel,

It’s been a while! Just want to drop by and say I think you guys are doing an outstanding job. pKantu is definitely a progressive development project in the right direction for algorithmic trading.

Work and life on my side has kept me waaay preoccupied, but I see myself contributing again soon as soon as things settle down.

Looking forward to see the live results of the fruit of pKantu.

All the best

Franco