Last year I wrote a post about how to retrieve account results using the Oanda RESTv1 API. By entering your account ID and token in the script you could obtain a summary of your account’s current statistics. However, with the advent of Oanda’s new RESTv20 API that script is now more or less obsolete, since it only works with the older API, so a new script is needed for accounts that use the new API. Today I want to talk about a new Python script I designed for this purpose, as well as some new features I have introduced to make it more useful for those of you who have several accounts and want to analyze them easily.

–

#!/usr/bin/python
# Daniel Fernandez, Jorge Ferrando
# https://asirikuy.com
# http://mechanicalForex.com
# Copyright 2017

import pandas as pd
import numpy as np
import time
import math
import ConfigParser, argparse, logging, zipfile, urllib, json
from time import sleep
import matplotlib.pyplot as plt
import matplotlib.dates as mdates
import qqpat
import requests
import datetime
import sys, traceback, re


def lastValue(x):
    # Last element of a resampled bin, or None when the bin is empty.
    try:
        reply = x[-1]
    except:
        reply = None
    return reply


def main():
    parser = argparse.ArgumentParser()
    parser.add_argument('-t', '--token')
    parser.add_argument('-a', '--account')
    parser.add_argument('-e', '--environment')
    parser.add_argument('-st', '--start_date')
    args = parser.parse_args()

    account_id = args.account
    token = args.token
    environment = args.environment

    # Default to None so the later check works when -st is omitted.
    start_date = None
    if args.start_date != None:
        try:
            start_date = datetime.datetime.strptime(args.start_date, '%Y-%m-%d')
        except ValueError:
            print "Start date not supplied in valid format 'YYYY-MM-DD'"
            quit()

    if account_id == None or token == None or (environment not in ["demo", "real"]):
        print "Please define a RESTv2 token (-t), environment (-e) as 'demo' or 'real' and a RESTv2 account (-a)"
        quit()

    dateFormat = mdates.DateFormatter("%d/%m/%y")
    transactions = []

    headers = {
        'Content-Type': 'application/json',
        'Authorization': 'Bearer {}'.format(token),
        'Accept-Datetime-Format': 'UNIX'
    }

    if environment == "real":
        api_url = "api-fxtrade"
    else:
        api_url = "api-fxpractice"

    base_url = 'https://{}.oanda.com/v3/accounts/{}/transactions'.format(api_url, account_id)

    if start_date != None:
        # time.mktime is portable; strftime('%s') is not available on every platform.
        start_ts = int(time.mktime(start_date.timetuple()))
        url = base_url + '?from={}.0&pageSize=1000'.format(start_ts)
        print url
    else:
        url = base_url + '?pageSize=1000'

    # The first request returns a list of page URLs; each page holds the transactions.
    r = requests.get(url, headers=headers)
    for page in r.json()['pages']:
        r = requests.get(page, headers=headers)
        transactions.extend(r.json()['transactions'])

    returns = []
    dates = []

    # Express each fill or financing charge as a return on the balance before it.
    for transaction in transactions:
        if transaction['type'] == 'ORDER_FILL':
            returns.append(float(transaction['pl']) / (float(transaction['accountBalance']) - float(transaction['pl'])))
            dates.append(datetime.datetime.utcfromtimestamp(float(transaction['time'])))
        elif transaction['type'] == 'DAILY_FINANCING':
            returns.append(float(transaction['financing']) / (float(transaction['accountBalance']) - float(transaction['financing'])))
            dates.append(datetime.datetime.utcfromtimestamp(float(transaction['time'])))

    s = pd.DataFrame({'profit': returns}, index=pd.DatetimeIndex(dates))
    balance = (1 + s).cumprod()
    total_return = 100*(balance['profit'].iloc[-1]-balance['profit'].iloc[0])/balance['profit'].iloc[0]

    fig, ax = plt.subplots()
    ax.plot(balance.index, balance['profit'])
    ax.xaxis.set_major_formatter(dateFormat)
    plt.xticks(rotation=45)
    plt.show()

    age = (balance.index[-1]-balance.index[0]).days

    # Daily, monthly and weekly returns from the last balance value in each period.
    s = balance.resample('D').apply(lastValue)
    s = s.pct_change(fill_method='pad').fillna(0)
    m = balance.resample('M').apply(lastValue)
    m = m.pct_change(fill_method='pad').fillna(0)
    w = balance.resample('W').apply(lastValue)
    w = w.pct_change(fill_method='pad').fillna(0)

    try:
        analyzer = qqpat.Analizer(s, column_type='return', titles=["profit"])
        cagr = analyzer.get_cagr()[0]
        sharpe = analyzer.get_sharpe_ratio()[0]
        maxdd = analyzer.get_max_dd()[0]
    except:
        cagr = 0.0
        sharpe = 0.0
        maxdd = 0.0

    avg_daily = np.asarray(s).mean()
    avg_weekly = np.asarray(w).mean()
    avg_monthly = np.asarray(m).mean()

    print "Total Profit: {}%".format(total_return)
    print "Account age: {} days".format(age)
    print "Maximum DD: {}%".format(maxdd*100)
    print "CAGR: {}%".format(cagr*100)
    print "Sharpe: {}".format(sharpe)
    print "Avg daily P/L: {}%".format(avg_daily*100)
    print "Avg monthly P/L: {}%".format(avg_monthly*100)
    print "Avg weekly P/L: {}%".format(avg_weekly*100)


##################################
###           MAIN            ####
##################################

if __name__ == "__main__":
    main()

–

The script above can help you retrieve account information from your RESTv20 API account. Unlike the old script, it does not require you to write your Oanda token and account ID into the code. Instead it takes command line parameters that define which account you want to analyze, the date from which you want to get data and whether the account lives on the “real” or “demo” server. In order to use the script you’ll need to install Python 2.7 plus the qqpat and requests libraries. If you’re using 64-bit Python on Windows you can install them by first installing Microsoft’s Visual C++ compiler for Python 2.7 and then issuing the command “python -m pip install qqpat requests”. On Linux you can simply run “sudo pip install qqpat requests”.

Once you have all the Python libraries installed you can use the script by saving it to a file (for example “oanda_script.py”) and then running the command “python oanda_script.py -a {account_id} -t {token} -e {real or demo} -st {starting date in YYYY-MM-DD}”. You do not need to define a starting date if you want to analyze the account from inception. In that case simply leave out the “-st” command line parameter and all the account data will be analyzed. Also note that RESTv20 processing can be a bit slow if you’re requesting a lot of transactions (several thousand, for example), so be patient while the script executes.
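To see why large accounts take a while, it helps to look at how the script walks the transaction history: the first request only returns a list of page URLs (each page covering up to the 1000 transactions requested with pageSize), and every page then needs its own request. The following is a minimal sketch of that loop, not the script itself. The names collect_transactions and fetch_page, and the stand-in data, are my own assumptions for illustration so that the example runs without network access.

```python
def collect_transactions(index_response, fetch_page):
    """Gather the transactions from every page listed in the index response.

    index_response: dict shaped like the JSON the script reads, holding a
        'pages' list of URLs.
    fetch_page: callable that takes a page URL and returns that page's JSON
        as a dict with a 'transactions' list. This is a hypothetical helper;
        in the script the equivalent is requests.get(page, headers=headers).json().
    """
    transactions = []
    for page_url in index_response.get('pages', []):
        page = fetch_page(page_url)  # one HTTP round trip per page in the real script
        transactions.extend(page.get('transactions', []))
    return transactions


# Stand-in data (made up) so the sketch runs offline.
fake_pages = {
    'page-1': {'transactions': [{'id': '1', 'type': 'ORDER_FILL'},
                                {'id': '2', 'type': 'DAILY_FINANCING'}]},
    'page-2': {'transactions': [{'id': '3', 'type': 'ORDER_FILL'}]},
}
fake_index = {'pages': ['page-1', 'page-2']}

all_transactions = collect_transactions(fake_index, fake_pages.get)
print(len(all_transactions))
```

Because the pages are fetched one after another, the running time grows with the number of pages, which is why using “-st” to shorten the date range also shortens the wait.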

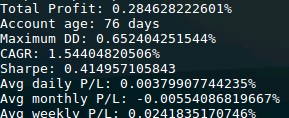

In the end you will be greeted with results like the ones above. You will be able to see some general account statistics, including profit, maximum DD, CAGR, Sharpe and average daily, weekly and monthly returns, plus a matplotlib-generated graph showing the evolution of your account balance as a function of time. It is also worth noting that the Oanda API won’t include transactions that have been closed in the current day, so at most the above will allow you to update your account trading results once every day. If you want, you can also modify the script to get other statistics. The script uses the qqpat library to obtain system statistics, so you can introduce additional qqpat function calls to get things like the Omega ratio, maximum drawdown length, average recovery, etc.
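If you plan to modify the statistics, it is useful to understand how the script builds the balance curve they are computed from. Each fill or financing charge is turned into a return relative to the balance before it, and those returns are then compounded. Here is a small sketch of that arithmetic in plain Python, using made-up numbers. The function names are mine and not part of the script, which does the same thing with a pandas cumprod.

```python
def transaction_return(amount, balance_after):
    """Return of one transaction relative to the balance before it.

    Mirrors pl / (accountBalance - pl) in the script, where accountBalance
    is the balance reported after the transaction.
    """
    return amount / (balance_after - amount)


def compound(returns):
    """Cumulative growth factors, the plain-Python analogue of (1 + s).cumprod()."""
    curve = []
    growth = 1.0
    for r in returns:
        growth *= 1.0 + r
        curve.append(growth)
    return curve


# Made-up (pl, accountBalance) pairs: +1%, -1%, +1% on the running balance.
fills = [(100.0, 10100.0), (-101.0, 9999.0), (99.99, 10098.99)]

returns = [transaction_return(pl, balance) for pl, balance in fills]
curve = compound(returns)

# Same formula as total_return in the script: measured from the first
# point of the curve, so the first transaction's own return is not counted.
total_return = 100.0 * (curve[-1] - curve[0]) / curve[0]
print(round(total_return, 6))
```

Working with returns rather than raw balances keeps the curve comparable across accounts of different sizes, since every account starts from the same growth factor.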

Since this script can be called from the command line with the account information as arguments, it lends itself really well to automating your account analysis. You can simply create a script that calls the Python analysis script once for each account you have, with the appropriate arguments, and in this manner you will be able to analyze all your account results in a matter of minutes. By using the starting date option you can also leave out results that are irrelevant to your trading, for example when you decide to test a different setup and you don’t care about the account’s results before that point in time.
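As a rough illustration of that idea, the wrapper below builds the command line for each account and runs the analysis script once per account. Treat it as a sketch under assumptions: the account IDs, tokens and the ACCOUNTS list are placeholders I made up, “oanda_script.py” is just the example file name used earlier, and build_command and analyze_all are hypothetical helper names.

```python
import subprocess


def build_command(script_path, account_id, token, environment, start_date=None):
    """Build the argument list for one run of the analysis script."""
    command = ['python', script_path,
               '-a', account_id, '-t', token, '-e', environment]
    if start_date is not None:
        # Omitting -st makes the script analyze the account from inception.
        command += ['-st', start_date]
    return command


# Placeholder accounts; replace with your own IDs, tokens and dates.
ACCOUNTS = [
    {'account_id': 'ACCOUNT_ID_1', 'token': 'TOKEN_1',
     'environment': 'demo', 'start_date': '2017-01-01'},
    {'account_id': 'ACCOUNT_ID_2', 'token': 'TOKEN_2',
     'environment': 'real'},
]


def analyze_all(accounts, script_path='oanda_script.py', run=subprocess.call):
    """Run the analysis script once per account and collect the exit codes."""
    exit_codes = []
    for account in accounts:
        exit_codes.append(run(build_command(script_path, **account)))
    return exit_codes


# To run it for real, call: analyze_all(ACCOUNTS)
```

Passing the arguments as a list avoids shell quoting problems with tokens. Keep in mind that the analysis script calls plt.show(), which normally waits until the chart window is closed, so the accounts will be processed one at a time as you close each chart.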

The above script provides a needed update for the RESTv1 script I had posted before. Besides supporting the new RESTv20 API, it also takes the account details as command line arguments, which makes the entire process much easier when there are multiple trading accounts to analyze. If you would like to learn more about coding and how you too can create your own automated trading systems please consider joining Asirikuy.com, a website filled with educational videos, trading systems, development and a sound, honest and transparent approach towards automated trading strategies.